The Data Science Instructional Escape Room—a Successful Experiment

Escape rooms have become a popular choice for group social events and team-building activities. They can be implemented in a variety of ways, presenting participants with mental and sometimes physical challenges that they must overcome to meet a particular goal in a prescribed amount of time. Traditionally, an escape room is a game in which a small group is locked in a room and must use resources in the environment to determine how to exit.

With a variety of tools, techniques, and a design framework aligned with the competency-based education (CBE) model, educators can also take advantage of this idea to engage and motivate students as they learn and to assess student competencies effectively. An escape room activity can also be implemented online, which can maximize instructional impact, reinforce learning, and make it possible to leverage the analytics built into widely used learning management systems.

The education and training (E&T) staff of the U.S. Department of Defense (DoD) is always looking for ways to provide relevant and up-to-date training for its military and civilian workforces. Its task is to create courses, content, and assessments in preparation for operational situations, a mission-critical responsibility. Over the past decade, “data science” quickly went from a popular term in memos and briefs to an essential, multifaceted area of DoD responsibility. Although operations research, cryptography, and other pre-existing areas involved multiple aspects of what is now called data science, the vast and rapidly changing data environment presented new challenges.

In response to the need for talent, many new hires with expertise in STEM fields started in their new roles as data scientists, while others had to adapt to new responsibilities and challenges as converted data scientists in their current organizations. However, the challenge does not stop at hiring and retaining talent; it extends to training this talent to apply techniques and develop solutions confidently within a large government organization.

In response to this dilemma, we teamed up in 2017 to create and implement an Instructional Escape Room (IER) as a review activity in the Data Science Fundamentals (DSF) course for some of DoD’s onboarding data scientists. This article outlines some of the DSF course topics, describes aspects of the IER implemented in the course, and discusses observations and outcomes of the experience, along with providing some general guidance about how to implement such activities online.

The Data Science Fundamentals Course

The Data Science Fundamentals (DSF) course was designed to introduce onboarding data scientists to their new role in a unique environment. Many of the students taking the course have backgrounds in mathematics, statistics, or computer science with some type of computer programming experience, but most of them have not applied their skills as data scientists. As a way to introduce a highly interdisciplinary field with applications that can be found in almost any area, the course aims to provide a basic foundation for the students to serve as confident and effective data scientists in a variety of office positions spanning a number of focus areas, including but not limited to counterintelligence, cybersecurity, human resources, security, and business.

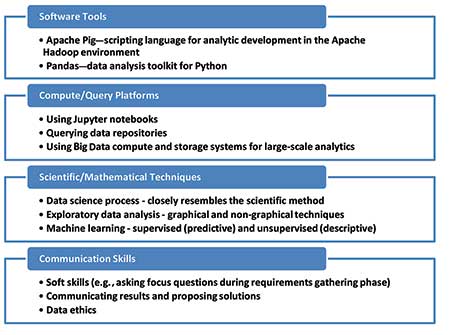

With a wide variety of potential applications in many focus areas, it became evident that it would be nearly impossible to prepare everyone with knowledge for each domain. Instead, the approach was to cover the relatively constant skills and knowledge that a data scientist can apply anywhere in the organization. These constants came down to four overarching areas: software tools, computer/query platforms, scientific/mathematical techniques, and communication skills.

Even with this domain-agnostic approach, there is still a large potential library of topics to cover in only a two-week class. The students are not expected to come away from the course with mastery in any one area, but with a solid foundation and a more complete understanding of the skills and knowledge necessary for their role. Figure 1 describes the modules within these topic areas more explicitly.

The course uses a variety of activities and guest lecturers to deliver the training effectively, including student presentations, panel discussions, and group work. The course progresses from activities with more clearly defined objectives to less structured, more open-ended activities and discussion.

As an example, one of the first activities is developing a Jupyter Notebook to show understanding of the exploratory data analysis techniques that have been presented. Later on during the course, the students work in groups to develop and propose a solution to a case study problem, with almost no solution being off limits.

This progression highlights a major challenge that must continue to be taught and conveyed to the students: addressing vague problems given only minimal background information, (maybe) some data, a requirement, and no instructions. Students have to become comfortable in that situation to succeed as data scientists, or as scientists in any field.

To address this challenge further, we developed an escape room activity to give the students a fun and different way of entering an environment with little to no instructions, while ultimately coming away with a solution, better understanding, and improved teamwork skills.

The DSF Instructional Escape Room

We created an IER as a team review activity to be conducted on the last day of class, after student presentations, followed by a classroom discussion about the experience. Students were randomly divided into three groups and read a scenario, the goal of the activity, and the rules. Within one hour, teams consisting of five to seven people each were to explore a room where they would search for and discover activities to complete, information to interpret, and clues to connect that would help them determine lock combinations and find keys to locked items.

The room contained two keyed lock boxes, one of which was disguised as a dictionary. There was also one three-digit combination lock box, one four-letter combination lock box, and invisible writing on some of the material. One of the keyed lock boxes contained a completion certificate indicating that the teams were successful. Without knowing ahead of time where their actions would lead, the teams used the IER materials to find the location of the key to this box.

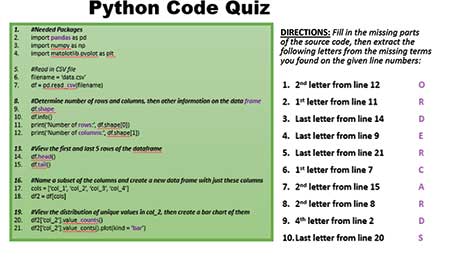

The materials included hints and activities that required using skills learned in class. One of the activities included some Python code with missing terms that were numbered, accompanied by a list of letter positions to be taken from those terms when correctly identified. This activity tested their knowledge of correct Python syntax for doing some basic data manipulation using Pandas methods and functions.

Another activity included properly identifying terms in a long list as related to machine learning, cloud computing, exploratory data analysis, Python packages, or data cleaning. Correctly understanding this vocabulary and the associations with it produced an important clue.

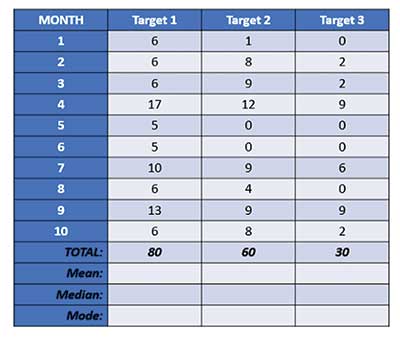

Some of the other activities involved computing statistics, plotting data, and understanding the data science process, all of which provided key clues when used correctly.
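As a rough illustration of how a statistics activity can yield a lock combination (for example, a data set whose mean, median, and mode give the three digits), here is a minimal Python sketch. The data set and the function name `combination_from_stats` are invented for this example and are not taken from the course materials.

```python
from statistics import mean, median, mode

# Hypothetical data set, invented for this sketch (not from the course materials).
data = [2, 3, 3, 3, 5, 7, 8, 9]


def combination_from_stats(values):
    """Build a three-digit code from the mean, median, and mode, in that order."""
    digits = []
    for stat in (mean(values), median(values), mode(values)):
        # Each statistic must be a whole number from 0 to 9 to serve as a lock digit.
        if float(stat) != int(stat) or not 0 <= stat <= 9:
            raise ValueError(f"{stat} is not a single digit")
        digits.append(str(int(stat)))
    return "".join(digits)


code = combination_from_stats(data)
print(code)
```

For this invented data set the mean is 5, the median is 4, and the mode is 3, so the sketch prints `543`. A designer could run the same check on decoy data sets to confirm that they do not also produce a valid three-digit code.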

There were also messages to decrypt, a “To Do” list with suggested activities, and a jigsaw puzzle with an important message. Activities and items were placed on walls, tables, and chairs; inside lock boxes; and on a website whose URL was provided as one of the clues.

Figure 2. Three data sets provided, with one revealing a three-digit lock combination as its mean, median, and mode.

Figure 3. A set of sequence cards found in different locations that reveal a key phrase clue at the bottom when arranged in proper order.

Figure 4. Incomplete block of Python code providing a key phrase clue “ORDER THE CARDS” when replacing missing parts with syntactically correct code. Incorrect answers produce the wrong letters, providing students with immediate feedback that at least some of their input has to be revisited. In most cases, students can quickly point out where mistakes were made and examine how to correct them.
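The letter-extraction mechanism described for the Python activity can be sketched as follows. This is only an assumed reconstruction of the idea: the blanks, the letter positions, and the `decode` helper are hypothetical, and the short clue spelled here is just the first word of the phrase, not the actual puzzle. The terms themselves are real pandas method and function names.

```python
# Hypothetical blanks: (correct term, 1-based letter position to extract).
# The terms are real pandas names, but this pairing is invented for the sketch.
BLANKS = [
    ("groupby", 3),
    ("sort_values", 3),
    ("dropna", 1),
    ("merge", 2),
    ("read_csv", 1),
]


def decode(answers, positions):
    """Take one letter from each answer at its listed position and join them."""
    letters = []
    for answer, position in zip(answers, positions):
        if 1 <= position <= len(answer):
            letters.append(answer[position - 1].upper())
        else:
            letters.append("?")  # answer too short to supply the requested letter
    return "".join(letters)


positions = [position for _, position in BLANKS]
correct_answers = [term for term, _ in BLANKS]
clue = decode(correct_answers, positions)

# One wrong answer (fillna instead of dropna) corrupts only its own letter.
attempt = ["groupby", "sort_values", "fillna", "merge", "read_csv"]
garbled = decode(attempt, positions)

print(clue, garbled)
```

With the correct terms the letters spell `ORDER`; with the one wrong term they spell `ORFER`, which shows how a team can see at a glance which blank needs another look.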

The Outcomes

A total of nine teams in three different classes were required to participate in the IER over the past year. Although the groups had one hour to complete the experience, most teams finished in 30 to 40 minutes; a few finished in even less time, and one team took about 50 minutes. Many of the students enjoyed the competitive challenge to see which team could complete the IER first, but all teams were required to finish. They knew they were being timed, and each team wanted to ensure that it did not hold the record for being the last team to finish.

At no point during any of the IER experiences was anyone observed as not participating. Since there was a time limit, teams were motivated to overcome the challenge as soon as possible. To do this, many of the teams took the “divide and conquer” approach—splitting up the work as they discovered activities to be completed.

The IER contained a mix of activities requiring various skill levels, so everyone could contribute in some way, no matter how strong (or weak) they were in different competency areas.

Participants often worked in pairs to complete tasks: one with confidence in the skills the task required, and another with equal or slightly less confidence who was interested in learning while contributing and checking the results. Others worked on making connections by reviewing the combined results of all activities that were completed at a given point in time, and others asked a lot of questions to process the strategies that were used. Communicating with the team to explain their reasoning and justify their results was required for success, and the IER provided a great opportunity to do just that.

Each activity was designed to focus on a specific competency, making it easy for both the students and the instructors to gain insight into how well the concepts were understood. Some of the activities were completed more easily than others, while some generated a lot of discussion among team members to determine the correct approach.

In one particular class, two of the three teams made errors in the activity requiring them to group terms into their associated categories, specifically with the cloud computing terms. This was noticed as the instructors walked through the rooms to observe the work on the board for the activity. The instructors pointed out to teams that the activity had to be revisited to produce the appropriate clue.

Both teams were able to resolve their issues by working together. More importantly, though, the students were found reviewing the activity after completing the IER and discussing how the concepts incorporated into that specific activity identified an area of weakness. This observation suggested that instructors teaching this course for the first time may not have delivered the content in this area as effectively as previous instructors.

In all cases, the IER follow-up discussions revealed that the students thoroughly enjoyed the activities and only complained when their team did not finish first. One student said that the activity brought everything together and “made the two weeks worth it.” Some of the students even presented ideas for additional activities using some of the skills they learned, such as requiring more plotting of data that would reveal the four-letter lock combination if visualized in an appropriate manner.

Overall evaluations completed by students after the course often emphasized the activity as a necessary highlight. Based on the comments, it was clear that the students felt empowered by being able to use newly acquired skills in class to produce something meaningful, such as a clue to get their team closer to the goal. Further, participants were able to identify areas of success and areas that needed more attention, while the instructors were able to glean how comfortable and competent students were, individually or as a team, with specific skills or topics.

Another consequence that was less apparent to students but certainly a desired outcome of the IER was their demonstrated ability to be given a goal, some information, and no directions, and then use critical thinking and problem-solving skills to produce a desired result. In any case, exciting tales of the escape room experience spread throughout the data science community quickly, particularly among those required to take the training.

The Data Science Fundamentals IER was the first implemented among this particular part of the government workforce. Since its success, Nelson has created IERs for mathematics and cryptanalysis courses, both inside and outside the DoD, as well as customized IERs to teach and motivate organizational best practices on knowledge management and cybersecurity assessments.

She has teamed with colleagues such as Crea to deliver workshops for Education and Training professionals on how to create these exciting events for an effective learner experience and to assess student competencies. The demand for these IERs as a training tool continues to grow; the number of requests for customized IERs from other sectors keeps increasing as the success stories spread.

Alternate Online Implementation of IERs

While this event was held in class, there are several ways to implement it or other IERs online. Implementing the event through a Learning Management System (LMS) such as Blackboard is probably the most beneficial method for instructors, since student activity can be tracked, recorded, and analyzed.

In an LMS, the materials are created as items, no different from assignments, assessments, or other references provided to students by their instructors. These simple guidelines can be used once the planning phase is complete and the environment is determined, and will help create an instructional escape room that can be reused and easily deployed with minimal work. Nelson’s workshops designed to teach instructors and designers how to create IERs provide this framework to guide the process.

1. Create individual, separate items as point/score assignments or just as references.

2. Use a setup where students enter correct answers as assignment submissions.

3. Decide time allotment (for each activity) as appropriate; an activity could be untimed.

4. Set unlimited attempts for each activity.

5. Determine order of access to activities/items as appropriate.

6. Set up conditional availability/adaptive release based on 100% score on previous item/activity to be completed in sequential order.
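The gating logic behind these guidelines (unlimited attempts, sequential order, and release of the next item only after a fully correct submission) can be sketched in plain Python. This is not the API of Blackboard or any other LMS, where such rules are configured through the system's own settings; the `Activity` and `EscapeRoomSequence` names and the sample answers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Activity:
    name: str
    answer: str
    attempts: int = 0
    solved: bool = False


class EscapeRoomSequence:
    """Releases each activity only after the previous one is answered correctly."""

    def __init__(self, activities):
        self.activities = list(activities)

    def available(self):
        # Visible items: every solved activity plus the first unsolved one.
        visible = []
        for activity in self.activities:
            visible.append(activity.name)
            if not activity.solved:
                break
        return visible

    def submit(self, name, response):
        if name not in self.available():
            raise PermissionError(f"{name} is still locked")
        activity = next(a for a in self.activities if a.name == name)
        activity.attempts += 1  # attempts are unlimited but counted
        if response.strip().lower() == activity.answer.lower():
            activity.solved = True
        return activity.solved


# Hypothetical two-step room.
room = EscapeRoomSequence([Activity("stats", "543"), Activity("code", "ORDER")])
first_try = room.submit("stats", "345")   # wrong answer, next item stays locked
locked_view = room.available()
second_try = room.submit("stats", "543")  # correct answer releases the next item
unlocked_view = room.available()
```

Counting attempts on each activity, even though they are unlimited, is what later lets an instructor see where students struggled.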

These guidelines ensure that students earn points as they successfully complete each activity and that both the student and the instructor can quickly assess the student's skill level associated with each activity. From the instructor perspective, when the LMS analytics are enabled, instructors can determine:

- How many attempts were needed until the student arrived at correct answers.

- What material should be reviewed.

- Which activities required more time, less time, etc.

- Whether the incorrect answers were pretty close or really far off.

- How far along the sequence of activities the student progressed and the highest level achieved.

These measures enable faculty and staff to track student performance, perform deeper analysis to identify problem areas, and take proactive measures to address any such problems.
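As a sketch of how two of these measures might be computed from an exported attempt log, consider the following. The log format, the activity names, and the helper functions are assumptions made for illustration; real LMS exports differ by product and configuration.

```python
# Hypothetical activity sequence and attempt log: (student, activity, correct),
# listed in chronological order. Real LMS exports will look different.
ACTIVITY_ORDER = ["stats", "code", "vocab"]

log = [
    ("ana", "stats", False),
    ("ana", "stats", True),
    ("ana", "code", True),
    ("ben", "stats", True),
    ("ben", "code", False),
    ("ben", "code", False),
]


def attempts_until_correct(records):
    """Map (student, activity) to the number of tries up to the first correct one."""
    counts = {}
    solved = {}
    for student, activity, correct in records:
        key = (student, activity)
        if key in solved:
            continue  # ignore anything submitted after the first correct answer
        counts[key] = counts.get(key, 0) + 1
        if correct:
            solved[key] = counts[key]
    return solved


def highest_level(records, order):
    """Count how many activities each student solved consecutively from the start."""
    solved = attempts_until_correct(records)
    levels = {}
    for student in {record[0] for record in records}:
        level = 0
        for activity in order:
            if (student, activity) not in solved:
                break
            level += 1
        levels[student] = level
    return levels


attempts = attempts_until_correct(log)
levels = highest_level(log, ACTIVITY_ORDER)
```

In this invented log, one student needed two attempts on the first activity and reached level 2, while the other stalled on the second activity at level 1. That kind of pattern could point an instructor toward material worth reviewing.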

Conclusion

IERs provide an effective, interactive, and engaging learning experience that motivates students to understand course content. They can be implemented in various formats and offer the opportunity to strengthen team-building, problem-solving, and critical thinking skills.

When well-designed, IERs can also be used to help both the instructor and student assess mastery levels of competencies associated with included activities.

This is particularly true when IERs are implemented online, such as through a Learning Management System. In this situation, individual student activity—such as amount of time spent on each activity, number of visits to material, and number of attempts before successful completion of activities—can be documented and analyzed using the inherent data collection and analytics. Both the instructor and the learner can quickly identify problem areas and gauge strengths and weaknesses.

As with any set of effective instructional materials, designing meaningful IERs entails a certain level of creativity and commitment. They require a significant time investment, availability of resources, patience, and support from other faculty and staff. Although the process can be time-consuming and the required amount of creativity demanding, the benefits reaped by students and instructors are well worth the effort.

About the Authors

Jason Crea is a data scientist with the Department of Defense and holds a BS in mathematical statistics from Ohio University.

Valerie Nelson is an applied research mathematician at the U.S. Department of Defense (DoD). She recently served as department head for the mathematics, cryptanalysis, and data science curricula at the National Cryptologic School (NCS) and was a lead for NSA’s Data Science Literacy Initiative and the IC’s Augmenting Intelligence using Machines (AIM) effort. She has served as adjunct faculty at George Washington University, Howard University, Morgan State University, and Prince George’s Community College, and recently completed a one-year assignment with the Instructional Technology Team at the University of Maryland Baltimore County. She received her bachelor’s and master’s degrees in mathematics from Morgan State University, her PhD in mathematics from Howard University as an Alliance for Graduate Education in the Professoriate (AGEP) and Preparing Future Faculty (PFF) fellow, and a Graduate Certificate in data science from the University of Maryland College Park.