Statisticians Introduce Science to International Doping Agency: The Andrus Veerpalu Case

In March 2013 the international Court of Arbitration for Sport (CAS) made an unprecedented decision. The case involved Andrus Veerpalu, an Estonian Olympic Gold Medalist in cross‐country skiing who tested positive for human growth hormone (hGH) and was deemed by the World Anti‐Doping Agency (WADA) to be a doper. As a consequence, Veerpalu was barred from competition for three years by the International Ski Federation (FIS). He appealed. CAS reversed the FIS decision and Veerpalu was acquitted of all charges. The court’s rationale was not the test itself, but the faulty use of statistics in interpreting the test results. This was the first and remains the only doping case WADA has had reversed, despite the many other appeals by athletes, some of which included statistical as well as technical and biological challenges.

The authors participated in the CAS hearing at its headquarters in Lausanne, Switzerland, in June 2012. Krista Fischer was a member of the Veerpalu team and presented statistical arguments to the court. Donald A. Berry was scientific counsel to Veerpalu’s legal counsel and was able to ask questions of experts from both sides.

The Status of Doping Testing

The purpose of doping testing in sport is to detect the presence of banned performance‐enhancing drugs in blood or urine samples from athletes. The use of drugs to enhance performance is considered unethical by most international sports organizations, including the International Olympic Committee.

The reasons performance‐enhancing drugs are banned include their health risks, the need to level the playing field for all athletes, and the exemplary effect of drug‐free sport for the public.

To coordinate anti-doping activities, WADA was established in 1999 as an international independent agency composed and funded equally by the sport movement and governments of the world. Its key activities include scientific research, education, development of anti‐doping capacities, and monitoring the World Anti‐Doping Code (Code), the document harmonizing anti‐doping policies in all sports and all countries. The Code is adopted by many sports organizations, including international sports federations.

International sports federations that have adopted the Code have testing jurisdiction over all athletes who are part of their member national federations or who participate in their events. All member athletes must comply with any request for such testing. The Code specifies the general standards for such testing, as well as the prohibited substance list.

In case of a positive doping test result, a so‐called adverse analytical finding (AAF), the Code specifies the right to a fair hearing for the athlete. If the athlete admits to doping or if the hearing results in the decision that the athlete has violated the anti‐doping rule, sanctions will be imposed on the athlete. The current Code specifies that the usual sanction after a first anti‐doping violation is two years of ineligibility for the athlete to participate in any events organized by the corresponding international sports federation or its member organizations. There are, however, circumstances where the period of ineligibility can be shortened or prolonged. The athlete has a right to appeal an AAF. Appeals of international‐level athletes are considered by CAS.

The Veerpalu Case

Veerpalu is Estonia’s most successful cross‐country skier. He won two gold medals at the Winter Olympics (2002 and 2006, both in the men’s 15 km classical), as well as gold medals in two world championships (2001 and 2009).

On January 29, 2011, Veerpalu was subject to an out‐of‐competition doping examination in Otepää, Estonia, performed by a WADA doping control officer. He provided samples that were then analyzed for hGH using isoform differential immunoassays by a WADA‐accredited laboratory. The test resulted in an adverse analytical finding of recombinant or exogenous human growth hormone (recGH), established in the original blood sample (A‐sample) and the confirmation blood sample (B‐sample). The results were made public in April 2011.

Although Veerpalu denied doping, the FIS Doping Panel imposed a sanction on him of three years ineligibility, effective from February 2011. The athlete’s team appealed the FIS decision to CAS. The CAS hearing took place at its headquarters in Lausanne, Switzerland, in June 2012 and was followed by an exchange of additional written submissions between July 2012 and February 2013.

In March 2013, the CAS panel made its final decision: Veerpalu was acquitted and all charges against him were dropped. The CAS media release provides the reasoning:

We will provide an overview of the arguments that led to this reversal, demonstrating the egregiously flawed inferential process that led to WADA’s AAF.

Scientific Issues in Doping Tests

The question at issue is whether the athlete used a banned substance. The evidence presented might be eyewitness testimony. For example, someone may testify to seeing the athlete inject or swallow a banned substance, or what is believed to be a banned substance. More typically, as in the Veerpalu case, determining whether an athlete used a banned substance relies on tests of the athlete’s blood or urine that are claimed to detect the substance or a characteristic of the substance in the athlete’s body fluid that can result only by administration of a particular banned substance or of a banned class of such substances.

In making inferences, there is a fundamental distinction between exogenous and endogenous substances. The former include some steroids that are not naturally occurring in humans. Their presence is evidence of use. Endogenous substances such as testosterone and human growth hormone are naturally occurring and their presence is normal. But high concentrations are abnormal and associated with use. But how high? What cutpoint should be set to balance the need for fairness in sport against the risk of ruining an athlete’s reputation and career?

There are many considerations and many sources of uncertainty and variability in data used to establish a cutpoint—called a “decision limit” or DL by WADA. One is the testing process itself. Another is the collection process. Another involves the possibilities for sample contamination and handling. Another is the variability within each individual depending on age, gender, time of day, season, diet, exercise, etc. Another is the selection process for databases to be used.

A rigorous scientific approach is exemplified by regulatory assessment of diagnostic tests in medicine. The test is used in experimental conditions that simulate the actual use of the test in practice. In the setting of doping tests, subjects are athletes whose use or non‐use is known to the investigators, including substance, dose, and time since administration. The subjects and those who collect samples should be blinded as to use. The experiments must have protocols that prospectively set down the statistical analyses and sample sizes in the user and non‐user categories. The need for a protocol is spelled out in a 2012 Journal of the National Cancer Institute article titled “Multiplicities in Cancer Research: Ubiquitous and Necessary Evils.” Nothing even approaching this level of rigor is present in doping testing, as we describe below.

Making inferences about doping requires rigorous experiments. These experiments must address substance used, its dose, and time since administration. The same is true regarding covariates such as age and diet. In addition, longitudinal results based on similar tests on the same athlete over time should play a role. But set these issues aside for simplicity’s sake. Regard the results of the experiment or experiments as having two distributions, one for users and the other for non‐users. Any particular DL defines a true‐positive rate from the first distribution, the test’s “sensitivity,” and a true‐negative rate from the second distribution, the test’s “specificity.” The test’s false‐positive rate is 1 minus specificity. Nonparametric estimates of these quantities from the experiment are natural: the proportions of the cases above or below the DL. Their accuracy depends, of course, on the respective sample sizes. Their credibility depends on the rigor in designing and conducting the experiments.
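The nonparametric estimates described above can be sketched in a few lines. This is only an illustration: the ratio values and the DL of 1.5 are invented for the example and do not come from any WADA data set, and the function name is ours.

```python
def classify_rates(user_ratios, nonuser_ratios, decision_limit):
    """Empirical sensitivity, specificity, and false-positive rate for one DL.

    A result is called positive when the ratio exceeds the decision limit.
    """
    true_pos = sum(r > decision_limit for r in user_ratios)
    true_neg = sum(r <= decision_limit for r in nonuser_ratios)
    sensitivity = true_pos / len(user_ratios)
    specificity = true_neg / len(nonuser_ratios)
    return sensitivity, specificity, 1.0 - specificity


# Hypothetical isoform ratios, invented purely for illustration
users = [1.9, 2.4, 0.9, 3.1, 1.6, 2.2, 1.1, 2.8]
nonusers = [0.3, 0.6, 0.8, 1.3, 0.5, 0.9, 0.4, 1.7, 0.7, 0.6]

sens, spec, fpr = classify_rates(users, nonusers, decision_limit=1.5)
print(f"sensitivity={sens:.2f} specificity={spec:.2f} false-positive rate={fpr:.2f}")
```

With samples this small, each proportion moves in large steps when a single observation changes sides, which is why the accuracy of such estimates depends so heavily on the sample sizes in both groups.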

No test result can categorize with certainty an athlete as a doper … or not. For any particular DL, false positives and false negatives are inevitable. Their rates are determined by the DL and have enormous implications for athletes and sport generally. Doping can have serious implications for the athlete’s health, including early death. On the other hand, many athletes attempt suicide upon being banned from sport, and at least one attempt was successful: 31‐year‐old rugby star Terry Newton.

This is an important societal issue. No group of technicians cloistered in their laboratories should make these life or death decisions. Unfortunately, politicians are clueless about the doping testing process and abdicate their responsibility to represent their constituents by simply accepting the testing process as foolproof. To compound the problem, there is virtually no funding to carry out appropriately rigorous experiments to determine sensitivity and specificity of doping tests.

The hGH Doping Test

hGH is a hormone synthesized and secreted by cells in the anterior pituitary gland located at the base of the brain. It is naturally produced in humans and necessary not only for skeletal growth, but also for recovering cell and tissue damage.

The major challenge in developing a doping test for hGH is that the level of total concentration of hGH in a human’s blood varies naturally and substantially over the course of time. There are approximately 10 hGH pulses during any 24‐hour period, so the total hGH concentration will differ markedly depending on the time of measurement. For this reason, developing a test based merely on the measurement of the total hGH concentration is, in practice, impossible.

However, the developers of the doping test claim the administration of exogenous hGH changes the proportions of various hGH isoforms in an individual’s blood by increasing the proportional share of one hGH isoform compared to others. Even though the levels of total hGH concentration will vary substantially, it is assumed the ratio between the relevant types of hGH isoforms measured by the test will naturally remain relatively stable. If this is correct, then the administration of exogenous hGH can be detected from an elevated ratio of the relevant hGH isoforms.

The testing is done by using two distinct sets of reactive tubes coated with two combinations of antibodies, which are referred to as Kit 1 and Kit 2 (or the “kits”). The decision limits determine the thresholds needed to assess whether an athlete’s blood contains natural or doped levels of hGH.

Given the above description, one needs sufficient evidence of the following:

- The isoform ratio measured by the test is stable and does not depend on the actual hGH concentration and therefore is not affected by timing of the test with respect to the normally occurring hGH pulses

- The isoform ratio does depend on exogenous hGH administration, and the association is sufficiently strong to enable distinguishing a doping user from a non‐user

- The specificity of the test is high and there is enough evidence to reject a hypothesis of an unacceptably low specificity

- Decision limits should depend on any characteristics of the athlete or the testing circumstances that affect the isoform ratio

In addition, the decision limits need to be set so that:

Further, the test and its validation data should be examined by an independent panel of experts who have no conflicts of interest, real or perceived.

Scientific (Statistical) Evidence Regarding Validity of the hGH Isoform Ratio Test

The Main Publication

In the CAS hearing, WADA representatives argued that the testing procedure used in the Veerpalu case was published by Martin Bidlingmaier and colleagues in Clinical Chemistry, a leading peer‐reviewed journal, in 2009, and that constituted sufficient review for the legal process. This publication addresses the following:

- Biochemical reasoning, explanation of the testing principle with particular reference to recGH and pitGH, motivating the ratio of two concentrations as the variable of interest.

- Dynamics of the isoform ratio level after hGH injection on 20 test subjects (who were recreational athletes), presented as % change from the baseline with absolute values not provided.

- Distribution of the isoform ratio in two samples of blood donors (n=76 and n=100), characterized by the mean, median, range, and 95% confidence interval for the mean. The isoform ratio varies in the range 0.08–1.32 for both Kits 1 and 2.

The purpose of the Bidlingmaier article was to describe a method that could be used as a doping test. No actual testing procedure was suggested and no decision limits were proposed.

Initial Study (2009) to Develop the DL

The samples for this study came from the IAAF World Championships in 2009 and from the German National Anti‐Doping Agency. There were 300 samples (197 males, 103 females). Only samples with an hGH concentration of > 0.05 ng/mL for either recGH or pitGH were included. This reduced the number of relevant samples for males to 109 Caucasians and 45 Africans for Kit 1 (and to 117 and 48, respectively, for Kit 2). Further, the parametric distribution of the ratio in the four subsamples (determined by ethnicity and gender) was assessed by testing the fit of various parametric distributions. WADA representatives claimed that a lognormal distribution gave the best fit.

Next, the DL was estimated as the 99.99 percentile of the fitted lognormal distribution in each subsample. They decided that the final DLs for all male samples to be tested would be those estimated from African males as these were higher than the corresponding estimates for Caucasian males.

WADA scientists claim that the test with the resulting DL has a specificity of at least 99.99%. The claimed false‐positive rate of less than 1 in 10,000 is quite remarkable from a sample of fewer than 200! Clearly, it relies strongly on the parametric form assumed for the distribution of test results.
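To see how much such a claim leans on the parametric assumption, consider what the data alone could support. The sketch below assumes, generously, 200 independent samples from non‐users with none above the DL, and computes the exact one‐sided 95% upper confidence bound on the false‐positive rate. The function names are ours, and the calculation is an illustration, not part of any analysis presented to CAS.

```python
import math


def zero_event_upper_bound(n, confidence=0.95):
    """Upper confidence bound on an event rate when 0 events occur in n
    independent trials; solves (1 - p)**n = 1 - confidence for p."""
    return 1.0 - (1.0 - confidence) ** (1.0 / n)


def samples_needed(target_rate, confidence=0.95):
    """Smallest n for which 0 events in n trials bounds the rate at or
    below target_rate."""
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - target_rate))


bound_200 = zero_event_upper_bound(200)   # roughly 0.015, about 1.5%
n_required = samples_needed(1e-4)         # on the order of 30,000 non-users

print(f"Upper bound with n=200: {bound_200:.4f}")
print(f"Samples needed to bound the rate at 1 in 10,000: {n_required}")
```

Without a distributional assumption, a sample of 200 cannot rule out a false‐positive rate more than a hundred times larger than the one claimed.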

The Verification Studies

Two subsequent verification studies were conducted, the first in 2009–2010 and the second in 2010–2011. The samples for these studies were, in fact, routine doping control samples from both in and out of competition. In total, 3,547 samples were tested with Kit 1 and 617 were tested with Kit 2. Before the distribution of the isoform ratio was assessed, a number of samples were excluded. First, only samples with pitGH concentration above 0.05 ng/mL and with recGH concentration above 0.1 ng/mL were used. The threshold for recGH was raised in comparison with the initial study, resulting in 25% of the samples being dropped from further analysis. Next, about 10 outlying (high) values of the ratio were dropped as being “suspicious” (claimed to be samples of doping users or problematic for technical reasons). Already, the data of the first verification study did not fit the assumed lognormal distribution for Kit 2 and therefore a gamma distribution was used instead. As the estimated 99.99 percentiles of the fitted gamma distributions were lower than the initial DL, the initial DL was claimed to be validated.

At the hearing, Berry asked the statistician representing WADA the following rhetorical question: “So you’re saying that you cut off the tail of the distribution and then claimed that it had a small tail, is that correct?”
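The point of the question can be illustrated with a small simulation. The sketch assumes lognormal ratios with arbitrary parameters and a simple fit by the mean and standard deviation of the log values; it uses simulated data only and is not a reconstruction of WADA's analysis, which used a gamma distribution for Kit 2.

```python
import math
import random
from statistics import NormalDist, mean, stdev


def lognormal_quantile(sample, prob):
    """Quantile of a lognormal fitted by the mean and SD of the log values."""
    logs = [math.log(x) for x in sample]
    return math.exp(mean(logs) + NormalDist().inv_cdf(prob) * stdev(logs))


random.seed(2013)
# Simulated ratios for non-users only; the parameters are arbitrary
sample = sorted(random.lognormvariate(-0.5, 0.6) for _ in range(2000))

dl_full = lognormal_quantile(sample, 0.9999)
dl_trimmed = lognormal_quantile(sample[:-10], 0.9999)  # ten largest values removed

print(f"99.99th percentile, all data:       {dl_full:.2f}")
print(f"99.99th percentile, top 10 removed: {dl_trimmed:.2f}")
```

Every value in this simulation comes from a non‐user by construction, yet discarding the ten largest lowers both the fitted mean and the fitted spread, so the estimated extreme percentile necessarily falls.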

WADA scientists did not reveal the change to the gamma distribution at the hearing, but only in a subsequent written submission, after the athlete’s scientific experts had been given access to the data of the verification studies and Fischer demonstrated the lognormal distribution assumption is rejected (by the Kolmogorov‐Smirnov test). However, the gamma distribution fitted only the Kit 2 data, which contradicts the assumption that the distribution of the underlying quantity is independent of method used.
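For readers unfamiliar with the Kolmogorov‐Smirnov test, the following is a minimal sketch of the one‐sample version against a fully specified distribution, using the standard asymptotic approximation for the p‐value. The data are simulated and the function names are ours; it is not the analysis submitted to CAS. Note that when the parameters of the hypothesized distribution are estimated from the same data, this p‐value is conservative.

```python
import math
import random
from statistics import NormalDist


def ks_statistic(sample, cdf):
    """Largest gap between the empirical CDF and a hypothesized CDF."""
    xs = sorted(sample)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs):
        f = cdf(x)
        d = max(d, (i + 1) / n - f, f - i / n)
    return d


def ks_pvalue_asymptotic(d, n, terms=100):
    """Asymptotic Kolmogorov p-value; adequate for moderately large n."""
    lam = math.sqrt(n) * d
    s = sum((-1) ** (k - 1) * math.exp(-2.0 * k * k * lam * lam)
            for k in range(1, terms + 1))
    return max(0.0, min(1.0, 2.0 * s))


random.seed(1)
# A lognormal variable has normally distributed logs, so test the logs
logs = [math.log(random.lognormvariate(0.0, 1.0)) for _ in range(500)]

d_ok = ks_statistic(logs, NormalDist(0.0, 1.0).cdf)   # correct hypothesis
d_bad = ks_statistic(logs, NormalDist(1.0, 1.0).cdf)  # wrong location
p_bad = ks_pvalue_asymptotic(d_bad, len(logs))

print(f"D (correct model) = {d_ok:.3f}, D (wrong model) = {d_bad:.3f}, p (wrong) = {p_bad:.2g}")
```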

Most importantly, none of the analytic processes used by WADA were prospective; at least, WADA has never provided a protocol or prospective statistical analysis plan. As is well known, this deficiency leads to biases and irreproducible research. Moreover, WADA operates behind closed doors, so it is impossible for anyone else to reproduce its analyses, let alone to properly account for the multiplicities involved. WADA’s worry is that revealing too much information about its processes would help athletes who may be trying to game the system. But this concern is subordinate to the essential openness of science.

Lack of Scientific Process

The initial study to develop the DL of the test and the subsequent verification studies did not use alternative means of confirming the actual doping use status of the tested athletes. There is little or no evidence about the distribution of the isoform ratio in doping users, or even a description of the shape of the distribution. Therefore, determining the sensitivity of the testing process is impossible, especially as it depends on the exact substance used, its dose, etc. So there is no way to properly separate doping users from non‐users. For most of the observed outlying values of the isoform ratio, there is no external evidence of whether the high values were obtained by doping use or are naturally occurring outlying values of the isoform ratio. As indicated above, the isoform ratio is not “constant” and its distribution is highly skewed, with outliers in the right‐hand tail.

The assumption that the isoform ratio does not depend on the actual concentration of recGH or pitGH was apparently not tested in either the initial study or the verification studies.

The initial study to develop the DL was apparently conducted without any pre‐approved protocol and without a statistical analysis plan. The distribution fitting was ad hoc, driven by someone’s subjective assessment of the empirical distribution in the small data set. Therefore, even the form of the parametric distribution is at best an assumption. Moreover, the resulting DL estimates were provided as point estimates that did not incorporate the sampling variation of the estimated distribution parameters used to estimate the quantile. Given the small sample sizes, it is impossible for their assumption of a parametric distribution to appropriately account for extreme values.
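The size of the sampling variation that a point estimate hides can be sketched with a bootstrap. The example assumes a simulated lognormal sample of 48 values (the size of the African male subsample for Kit 2) with arbitrary parameters, and it takes the lognormal form as given, which is itself an assumption. The names and numbers are illustrative only.

```python
import math
import random
from statistics import NormalDist, mean, stdev

Z_9999 = NormalDist().inv_cdf(0.9999)  # standard normal 99.99th percentile


def fitted_dl(sample):
    """99.99th percentile of a lognormal fitted to the sample."""
    logs = [math.log(x) for x in sample]
    return math.exp(mean(logs) + Z_9999 * stdev(logs))


random.seed(48)
# Simulated ratios; not real data
sample = [random.lognormvariate(-0.5, 0.5) for _ in range(48)]
point_estimate = fitted_dl(sample)

# Refit on 2,000 resamples drawn with replacement from the same 48 values
boot = sorted(fitted_dl(random.choices(sample, k=len(sample)))
              for _ in range(2000))
lower, upper = boot[49], boot[1949]  # approximate 2.5th and 97.5th percentiles

print(f"point estimate {point_estimate:.2f}, "
      f"95% bootstrap interval {lower:.2f} to {upper:.2f}")
```

Even with the distributional form assumed correct, the interval for the estimated DL is wide at this sample size. Uncertainty about the form itself would widen it further.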

The verification studies used samples from a heterogeneous mix of athletes. Despite ethnicity affecting the results in the initial study, there was no distinction made between Africans and Caucasians in the verification studies. Moreover, it is likely the verification studies contain multiple samples from the same individuals, a fact not considered in the analysis. There was no covariate assessment or subgroup comparison conducted in the verification studies, so it is unknown how age, for instance, would affect the result. This is important because it is well known that hGH concentration in blood is highly dependent on age.

Test Results, CAS Decision, and WADA Reaction

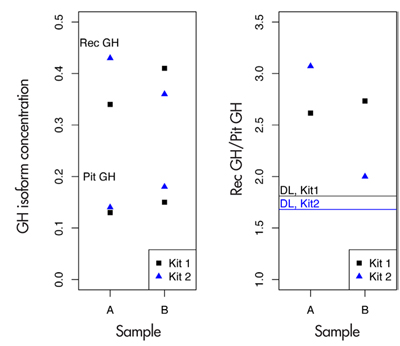

Veerpalu’s hGH isoform ratio test results were as follows: 2.62 and 2.73 for the A and B samples when measured with Kit 1; 3.07 and 2.00 when measured with Kit 2. The hGH concentrations themselves were found to be relatively low, with pitGH measured from the A sample as 0.13 and 0.14 with Kits 1 and 2, respectively, with the recGH measurements being 0.34 and 0.43. In the B sample, pitGH values were 0.15 and 0.18 with Kits 1 and 2, with recGH values 0.41 and 0.36. Figure 1 shows these results graphically.

Figure 1. hGH test results of Andrus Veerpalu. The horizontal lines in the right panel indicate the decision limits in the ratio for kits 1 and 2.
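The reported ratios can be checked against the reported concentrations. The sketch assumes the isoform ratio is the recGH measurement divided by the pitGH measurement, an assumption that reproduces all four published values.

```python
# (recGH, pitGH) concentrations as reported above, keyed by sample and kit
measurements = {
    ("A", "Kit 1"): (0.34, 0.13),
    ("A", "Kit 2"): (0.43, 0.14),
    ("B", "Kit 1"): (0.41, 0.15),
    ("B", "Kit 2"): (0.36, 0.18),
}

# Assumed definition: ratio = recGH / pitGH, rounded to two decimals
ratios = {key: round(rec / pit, 2) for key, (rec, pit) in measurements.items()}

for (sample, kit), ratio in ratios.items():
    print(f"Sample {sample}, {kit}: ratio = {ratio:.2f}")
```

Because the pitGH values in the denominator are small, a change of a few hundredths in the measured concentration shifts the ratio noticeably.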

There were atypical aspects of the Veerpalu case and the timing of the testing. First, Veerpalu was considerably older (at 41 years) than typical elite athletes. Also, the testing was conducted in a so‐called alpine chamber, with hypobaric conditions similar to those in the mountains. Just before the blood sampling, Veerpalu performed an extremely strenuous training session of more than three hours of intense skiing. The handling of the blood sample deviated from what was the recommended procedure for the WADA hGH test; in particular, there was a longer than recommended delay from blood sampling to centrifuging the sample. The dependence of the isoform ratio on these factors is unknown and so could not have been considered by WADA. There is no evidence regarding whether they could have contributed to relatively high recGH or relatively low pitGH values in Veerpalu’s samples. Also, the pulsatile nature of hGH secretion may create situations in which one can observe an outlying isoform ratio during certain moments of the day. No adequate studies have been conducted to address these issues.

In its final decision, CAS accepted the arguments of Veerpalu’s scientific experts that there is insufficient evidence on whether the DL used for the test is valid:

The decision included the following: “In this case, the Appellant has raised concerns regarding the validity of the Test and its application in his case that were neither spurious nor fabricated.”

Reaction to the CAS Decision

The reactions to the CAS decision were mixed. The only reaction that was unanimous (except for WADA) was to blame WADA.

In a story titled “Andrus Veerpalu Wins Doping Appeal,” the Associated Press reported the following:

The Associated Press also reported the reaction of the NFL Players’ Association:

In an article titled “How WADA Dropped the Ball on the Veerpalu Doping Case,” FasterSkier reported:

“There were many factors in this case which tend to indicate that Andrus Veerpalu did himself administer exogenous hGH,” the press release noted, but continued on to say that there was insufficient statistical proof to ban him.

Has this ever happened before?

Gary Wadler, a New York doctor who used to work on the committee to select the World Anti‐Doping Agency (WADA) Prohibited List, wasn’t familiar with the case, but he was certainly surprised.

“I have not heard of that, no,” he told FasterSkier of a successful appeal on the basis of math.

CAS is sure that Veerpalu administered some form of hGH, perhaps like Genotropin … they just can’t prove it.

And the doctor who created the test, which compares ratios of differently structured molecules of hGH and had served as a witness in the CAS hearings, was no less flummoxed.

“I could not understand from the information I have what the criticism was,” Dr. Martin Bidlingmaier told FasterSkier in an interview from his clinical offices at the University Clinic of Munich. “I know the findings in this case, and in my opinion it looked quite clear.”

No matter who you ask, as long as they are not affiliated with Veerpalu, you’ll hear that the cat was in the bag; Veerpalu was doping. So how could the case have gone wrong, with a test that has been peer‐reviewed and can easily detect an unnatural ratio of the different forms of hGH?

…

As for Bidlingmaier, who developed a test that he thought would catch people just like Veerpalu, he’s still marveling at how WADA failed to do so.

“All I know is from the media and the website of CAS,” he told FasterSkier. “As far as I understood, the decision made very clear that the test itself is scientifically sound and there were no doubts with respect to all the labwork. The discussion arose only around the statistical model used around the decisions they make, between positive and negative! I’m a physician and a biochemist, I’m not a statistician, and it’s their world, their arguments. I have no idea if it’s valid or not.”

In an article titled “After Veerpalu Ruling, All Eyes on WADA,” Estonian Public Broadcasting indicated thus:

This same article quotes one of the most respected authorities in the doping community, Don Catlin, founder of the UCLA Olympic Analytical Laboratory, the world’s largest testing facility of performance‐enhancing drugs:

Catlin said the Veerpalu case [was] “scary,” saying it showed that WADA, which he said is underfinanced, can make big mistakes, and innocent people can be found guilty. He added, though, that he wasn’t up-to-date with the case and couldn’t evaluate whether charges against Veerpalu were merited in light of the court’s emphasis that, despite a not-guilty verdict, data indicated that the skier had broken doping rules.

Discussion

Doping tests should not be used in practice until they have been shown to be scientifically valid, and that has not yet occurred in hGH testing. At minimum, doping tests should have to meet the scientific standards for medical diagnostics. Actually, standards in doping testing should be higher. Most diagnostics have follow‐up tests and procedures. For example, an imaging method may identify a probable cancer. Follow‐up procedures, including other imaging methods but also possibly a biopsy, will resolve the issue. In doping, the final decision hangs on the test result alone … at least given the current attitude toward doping testing.

The analysis of doping data must be subjected to the strictest standards of reproducible research. This includes having a protocol and following the protocol, and the protocol must specify detailed procedures for collecting the data. Doping research cannot be exempt from the generally accepted view that science must be open. Science in a closet is not science. Doping research must be published, including data, along with the protocols and prospective data analysis plans for conducting the research.

Doping testing is currently in the hands of a single worldwide agency that answers to no one except CAS, and even if exonerated, the athlete is forever tainted. This is in sharp contrast to medical product development, say, where regulators around the world ensure a high level of science before drugs and medical devices can be used in practice. National governments must take an active role in scrutinizing doping testing and addressing what is acceptable from the perspective of false‐positive and false‐negative rates, for example. Medical doctors take an oath to “first, do no harm.” Governments must face up to the fact that doping testing can ruin people’s lives, possibly inappropriately. Finally, as part of their obligation to protect their citizens, governments must provide funding for experiments necessary to adequately and scientifically establish the accuracy of testing processes.

Our presentation in this article has focused on the data analysis of hGH in the Veerpalu case. Our comments apply to doping more generally. But there are several enormously important issues in doping testing we have not addressed. The two most important of these are the inferential issues associated with multiplicities and “the prosecutor’s fallacy.” Regarding the former, sometimes there are multiple tests within individuals (such as in the Tour de France where winners are tested many times, creating an obvious bias), but there are always many individuals who are tested. So the overall false‐positive rate is much larger than that for a particular test. The prosecutor’s fallacy is an inappropriate flip of the conditional in calculating probabilities, analogous to the nearly universal but erroneous flip in interpreting p‐values. Namely, when an extreme test result has a particular (small) probability of being observed, that probability is taken to be the probability of innocence. Both these issues are developed in detail in the context of doping testing in Berry’s 2008 Nature article, “The Science of Doping.”
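Both issues reduce to short calculations. In the sketch below, every number (the per‐test false‐positive rate, the number of tests, the prior probability of doping, and the sensitivity) is hypothetical and chosen only to show the mechanics. The tests are also assumed independent, which repeated tests on one athlete would not be.

```python
def prob_any_false_positive(false_positive_rate, n_tests):
    """Chance of at least one false positive among n independent tests
    of non-users."""
    return 1.0 - (1.0 - false_positive_rate) ** n_tests


def prob_innocent_given_positive(prior_doping, sensitivity, false_positive_rate):
    """Bayes' rule: probability that an athlete with a positive test
    did not dope."""
    p_pos_and_doper = sensitivity * prior_doping
    p_pos_and_clean = false_positive_rate * (1.0 - prior_doping)
    return p_pos_and_clean / (p_pos_and_doper + p_pos_and_clean)


# Multiplicities: a 1-in-10,000 rate per test, applied to 10,000 tests
family_rate = prob_any_false_positive(1e-4, 10_000)

# Prosecutor's fallacy: a 1% false-positive rate is not a 1% chance of innocence
p_innocent = prob_innocent_given_positive(
    prior_doping=0.01, sensitivity=0.5, false_positive_rate=0.01
)

print(f"P(at least one false positive) = {family_rate:.2f}")
print(f"P(innocent | positive test)    = {p_innocent:.2f}")
```

Under these hypothetical inputs, a false positive somewhere among the tests is more likely than not, and an athlete with a positive result is more likely innocent than guilty even though the test's false‐positive rate is only 1%.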

Authors’ Note: The other scientific experts representing Veerpalu at the CAS hearing as well as in various written submissions were Sulev Kõks, Anton Terasmaa and Douwe DeBoer. The scientific discussion was made possible by the team of attorneys of law defending Andrus Veerpalu: Aivar Pilv, Ilmar-Erik Aavakivi, Thilo Pachmann and Lucien Walloni.

Further Reading

Baggerly, K. A., and D. A. Berry. 2011. Reproducible research. Amstat News 403:16–17.

Berry, D. A. 2012. Multiplicities in cancer research: Ubiquitous and necessary evils. Journal of the National Cancer Institute 104:1125–1133.

Berry, D. A. 2008. The science of doping. Nature 454:692–693.

Berry, D. A., and L. A. Chastain. 2004. Inferences about testosterone abuse among athletes. CHANCE 17:5–8.

Bidlingmaier, M., J. Suhr, A. Ernst, Z. Wu, A. Keller, C. J. Strasburger, and A. Bergmann. 2009. High‐sensitivity chemiluminescence immunoassays for detection of growth hormone doping in sports. Clinical Chemistry 55:445–453.

About the Authors

Krista Fischer is a biostatistician working as senior researcher at the Estonian Genome Center, University of Tartu, Estonia, where she is leading a research group on statistical analysis of omics data. She also has done research on causal inference in clinical trials and is the president of the Nordic-Baltic Region of the International Biometric Society.

Donald Berry is a professor in the department of biostatistics at the University of Texas MD Anderson Cancer Center. He was founding chair of the department in 1999 and also founding head of the Division of Quantitative Sciences, including the department of bioinformatics and computational biology. He previously served on the faculties of the University of Minnesota and Duke University and held endowed positions at Duke and MD Anderson.