The Statistics of Genocide

It is fairly common for statisticians to be asked to serve as expert witnesses in civil and criminal court cases (see, for example, Statistics and the Law by DeGroot, Fienberg, and Kadane). However, it is still fairly rare for statisticians to testify in human rights cases. At the Human Rights Data Analysis Group (HRDAG), we consider testifying as expert witnesses to be one of the most visible and potentially influential outcomes of our work. We have found that quantitative work can often address key questions about patterns of violence and accountability in conflict settings and situations with mass violence.

HRDAG co-founder and director of research Patrick Ball has testified in five national and international human rights court cases, and statistician Daniel Guzmán has also contributed testimony in one case. Guzmán described his experience presenting our analyses of documents from the Historic Archive of the National Police in Guatemala in an article published in CHANCE in 2011.

This article describes another Guatemalan court case, this time addressing the question of whether General José Efraín Ríos Montt authorized and was responsible for acts of genocide against indigenous Maya Ixil during his tenure as de facto president in 1982–1983. To answer this question, statistical analyses must consider whether patterns of violence were consistent with targeting a specific ethnic group.

Historical Context

The period 1982–1983 was the most lethal in Guatemala’s 36-year civil war. The conflict between the Guatemalan government and various leftist rebel groups had been raging for more than 20 years. What began as a disagreement over land rights, widespread socioeconomic discrimination, and racism had devolved into a large-scale campaign of one-sided violence by the Guatemalan government against the civilian population.

General José Efraín Ríos Montt took power following a coup in March 1982. He implemented brutal counter-insurgency policies, including a “scorched earth” campaign that targeted Guatemala’s Mayan Ixil community and “pacification strategies” that explicitly targeted entire villages for annihilation. The Historical Clarification Commission (CEH, its acronym in Spanish), formed at the end of the war, estimated that there were more than 600 massacres during this two-year period.

Data Collection

Patrick Ball’s work in Guatemala began in 1995 when he helped the International Center for Human Rights Research in Guatemala (CIIDH, from the organization’s name in Spanish) design a database that organized information about human rights violations during the conflict from a variety of sources.

Throughout the conflict, many human rights groups were working to draw attention to violence perpetrated by the state. In 1993, several of these groups formed the National Human Rights Coordinating Committee, and in 1996, this collection of groups agreed to pool the information they had each gathered. At that time, the CIIDH had the experience and technical skills necessary to structure, analyze, and publish the collection of data that the member organizations had entrusted to it.

The heart of the CIIDH database is more than 6,000 testimonies. Some were collected by the contributing organizations, but the majority were collected directly by the CIIDH team. Between 1994 and 1996, the CIIDH formed regional teams to conduct interviews around the country, including in the Petén jungle, the Verapaces, and the western highlands (Huehuetenango, El Quiché, Sololá, Quetzaltenango, San Marcos, and Chimaltenango).

Interviewers used a standard, semi-structured protocol. This protocol ensured that teams conducted similar interviews and aided the accurate reporting of stories from victims and witnesses. Two-thirds of the interviews were conducted in witnesses’ primary Mayan language.

Since the CIIDH database included information from multiple sources, researchers soon discovered that the same violations could be described in multiple records. For example, multiple witnesses might report an event in separate interviews, or an incident could be described in both a news story and an interview. Further complicating the situation, the accounts were often not quite identical. One witness might recall that three of her neighbors were killed, while another might recall that there were four deaths. A press account might give a different number of victims or slightly different description of a location.

Although the CIIDH researchers could not foresee precisely how the data they were collecting might be used in the future, they did know that it would be important to be able to examine multiple layers of an individual case and to help identify repeated mentions of the same victim in different sources. They were careful to structure the database in a way that made this possible. This also meant that the database retained all information from all sources—never discarding anything, even if it was repeated in another source.

The database was also designed so that every decision made by an analyst was recorded. For example, whether and how an analyst determined that an individual described in one source was the same as an individual described in another source was recorded as “meta-data” (data about data and related decisions) along with the content of the records themselves, so that a complete audit trail could be traced from the original sources to the analyses of the databases.

This careful attention to detail proved valuable later, when researchers decided to use the data in ways the CIIDH team had not originally anticipated.

At the same time as the CIIDH team was gathering data on human rights violations, the United Nations was working to broker a peace accord between the Guatemalan government and the URNG (the umbrella organization of leftist rebel groups) that would officially end the internal armed conflict.

This process took many years and resulted in multiple agreements. One of these, the Accord of Oslo, signed in 1994, specified the establishment of a Commission for Historical Clarification (CEH). The CEH was charged with clarifying “…with objectivity, equity and impartiality, the human rights violations and acts of violence connected with the armed confrontation that caused suffering among the Guatemalan people.” During the course of its work from 1997 to 1999, the CEH collected more than 11,000 interviews about the conflict. These interviews were conducted in the field, across the country. At the peak of its investigation, the CEH was managing 14 field offices. Ultimately, the CEH produced a 12-volume report, drawing on the individual testimonies as well as historical documents and other sources.

By 1997, Ball was advising both CIIDH and CEH on database design, structure, statistical analysis, and electronic security. He and his colleagues spent most of 1998 preparing two analytical reports: “State Violence in Guatemala, 1960–1996: A Quantitative Reflection” based on the CIIDH database, and the final section of the CEH report, based on an analysis of a combination of data from CEH, CIIDH, and a third human rights effort in Guatemala, the Catholic Church’s Recovery of Historical Memory (REMHI) project.

A project of the Human Rights Office of the Archbishop of Guatemala, REMHI was conceived by Bishop Juan Gerardi Conedera at the end of 1984, and implemented as a coordinated effort of 10 of Guatemala’s 11 dioceses in 1995. The goal of the REMHI project was to “arrange and describe [the history of the conflict] through the voices of the very victims who, after all, had the best knowledge of the truth.” REMHI conducted structured narrative interviews, and developed a database that was shared with the CEH.

The CEH debated whether and how their investigations should consider potential acts of genocide. Christian Tomuschat, chair of the commission, hosted a seminar and asked all of the jurists, historians, ethnographers, and scientific experts to present their best case for and against including an investigation of genocide in the CEH report.

During this seminar, Marcie Mersky, a field office leader and coordinator of the final report, asked Ball whether statistical analyses could be used to address this question. Ball was confident they could, but he was not yet sure which methodological approach would be best. After a conceptual discussion with CEH colleague Carlos Amezquita, Ball sought help from his colleague and mentor Fritz Scheuren, a statistician at the National Opinion Research Center at the University of Chicago.

The first task was analogous to the challenge Ball and his colleagues faced at CIIDH: how to identify multiple records, either within the same database or across different databases, that refer to the same individual victim. To answer this question, researchers randomly selected a sample of records from each of the CIIDH, CEH, and REMHI databases. They then compared each sampled record to all the sampled records in the other two databases and decided whether any referred to the same event.

Although the choice of individual records was random, the sample was stratified by geographic region to ensure that records from the entire country were covered. This manual comparison produced estimated overlap rates—the proportion of records in each individual source that would be expected to also show up in one or both of the other sources. These rates were then applied to the entire list from each source to produce an estimated total number of overlapping records among the sources.
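The stratified overlap calculation can be sketched in a few lines of Python. The regions, stratum sizes, and sample results below are hypothetical, chosen only to show the arithmetic: within each stratum, the overlap rate is the share of sampled records judged to match a record in another source, and that rate is applied to the stratum's full list before summing.

```python
# Hypothetical stratified sample for ONE source: per region, the number of
# records in the full list, the number sampled, and how many sampled records
# were judged to match a record in one or both of the other sources.
strata = {
    # region: (records_in_full_list, records_sampled, sampled_records_matched)
    "North": (4000, 200, 60),
    "West": (6000, 300, 120),
}


def stratified_overlap(strata):
    """Return (estimated overlap rate, estimated number of overlapping records)."""
    total_records = sum(n_full for n_full, _, _ in strata.values())
    overlapping = 0.0
    for n_full, n_sampled, n_matched in strata.values():
        rate = n_matched / n_sampled      # overlap rate within this stratum
        overlapping += rate * n_full      # apply that rate to the whole stratum
    return overlapping / total_records, overlapping


rate, count = stratified_overlap(strata)
print(f"overlap rate ~ {rate:.3f}, overlapping records ~ {count:.0f}")
```

Stratifying means that a region with many records and a high overlap rate is not averaged away by a region with few records, which a single pooled sample could do by chance.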

Once this work had begun, Scheuren realized that the information describing the number of cases in common across the projects was precisely the structure of data needed for a class of statistical methods that could estimate the total number of deaths that had occurred during the war, including the deaths that were never reported to any of the three groups.

Scheuren proposed to use a group of methods called multiple systems estimation (MSE). (Unfortunately, MSE is also the acronym for mean squared error, an important concept in statistics. In this essay, we only use MSE to refer to multiple systems estimation.) These methods had not been used in human rights investigations before, but they were common in a variety of other disciplines because of the way they combine information from different sources, potentially collected via different methods, and then estimate what is missing from all of the sources.

The output of these analytical methods is an estimate of the total population size. In this case, that means MSE could estimate the total number of victims, including both those documented by one or more sources and those not yet documented, whose names and stories we do not yet know.
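The simplest member of the MSE family is the two-list estimator often called the Lincoln-Petersen estimator (see the Goudie and Goudie history in Further Reading). The sketch below uses made-up counts; it assumes the two lists are independent and that every victim has the same chance of being recorded on each list, which are exactly the assumptions the multi-list methods discussed later are meant to relax.

```python
def petersen_estimate(n_list_a, n_list_b, n_both):
    """Two-list (Lincoln-Petersen) estimate of total population size.

    n_list_a: victims recorded by list A (including those also on B)
    n_list_b: victims recorded by list B (including those also on A)
    n_both:   victims recorded by both lists (found via record linkage)
    """
    if n_both == 0:
        raise ValueError("no overlap between lists: estimate is undefined")
    return n_list_a * n_list_b / n_both


# Hypothetical counts, not from any Guatemalan source.
total = petersen_estimate(100, 80, 20)
documented = 100 + 80 - 20              # victims on at least one list
undocumented = total - documented       # estimated victims missing from both
print(total, documented, undocumented)  # 400.0 160 240.0
```

The intuition: if list B found 20 of list A's 100 victims, list B covers roughly a fifth of all victims, so B's 80 victims suggest about 400 in total.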

Methods

The first step in implementing MSE is to identify records from multiple sources that refer to the same individual victim. As described above, this was initially done by researchers manually reviewing records and making decisions. More recently, computer scientists have done a great deal of work on algorithms that match records automatically.

The process is referred to by a number of names, including record linkage, database de-duplication, and entity resolution. A subsequent update of the original analyses in Guatemala incorporated some of these automated approaches.
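As a toy illustration of automated linkage (not HRDAG's actual pipeline), the sketch below scores candidate record pairs by name similarity using the standard-library `difflib.SequenceMatcher` and requires exact agreement on date and place. The field names, the 0.85 threshold, and the example records are all hypothetical; production systems add blocking, many more comparison fields, a trained classifier, and human review.

```python
from difflib import SequenceMatcher
from itertools import product

# Hypothetical records from two sources; field names are illustrative only.
source_a = [
    {"id": "A1", "name": "juan perez lopez", "date": "1982-07-17", "place": "Nebaj"},
    {"id": "A2", "name": "maria cobo", "date": "1982-04-03", "place": "Chajul"},
]
source_b = [
    {"id": "B1", "name": "juan peres lopez", "date": "1982-07-17", "place": "Nebaj"},
    {"id": "B2", "name": "pedro raymundo", "date": "1983-01-20", "place": "Cotzal"},
]


def name_similarity(a, b):
    """Similarity ratio in [0, 1] between two normalized name strings."""
    return SequenceMatcher(None, a, b).ratio()


def link(records_a, records_b, threshold=0.85):
    """Return (id_a, id_b) pairs judged to describe the same victim."""
    matches = []
    for ra, rb in product(records_a, records_b):
        # Require agreement on date and place, then allow a fuzzy name match
        # so spelling variants such as "perez"/"peres" still link.
        if ra["date"] == rb["date"] and ra["place"] == rb["place"]:
            if name_similarity(ra["name"], rb["name"]) >= threshold:
                matches.append((ra["id"], rb["id"]))
    return matches


print(link(source_a, source_b))  # [('A1', 'B1')]
```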

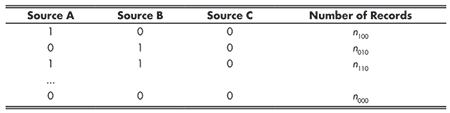

Once this identification process is complete, each unique victim retains the information about which source(s) recorded him or her. Each victim's record then includes both identifying information (name, date and location of death, perhaps additional demographic information) and indicators of which source(s) documented that victim. Such data can then be summarized as an incomplete contingency table, essentially counting the number of victims with each documentation pattern (included in source A but not B, etc.). The estimate of interest is the final line, the number of victims who were not documented by any source (n000; see the example in Figure 1).
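Once linkage has produced one row per unique victim, tallying the documentation patterns is straightforward. A minimal sketch with three hypothetical sources labeled A, B, and C (the victim IDs and memberships are made up): each victim's pattern is a tuple of 0/1 indicators, and the (0, 0, 0) cell is, by construction, never observed.

```python
from collections import Counter
from itertools import product

# Hypothetical linked data: each unique victim and the set of sources naming them.
victims = {
    "v1": {"A"}, "v2": {"A", "B"}, "v3": {"B", "C"},
    "v4": {"A", "B", "C"}, "v5": {"C"}, "v6": {"A"},
}
sources = ("A", "B", "C")

# Documentation pattern: 1 if the source recorded the victim, else 0.
patterns = Counter(
    tuple(int(s in recorded_by) for s in sources) for recorded_by in victims.values()
)

# Print the incomplete contingency table; the (0, 0, 0) cell is unobservable.
for cell in product((1, 0), repeat=len(sources)):
    label = "n" + "".join(map(str, cell))
    value = "?" if cell == (0, 0, 0) else patterns.get(cell, 0)
    print(label, value)
```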

Data structured in this way are then ready for the broad class of methods called MSE. It is important to keep in mind that “MSE” includes a number of different specific methods, just as “regression” includes linear and logistic (among many other types of) regression.

HRDAG did two different analyses of mortality in Guatemala. Both covered the same time period, but the analyses were precipitated by events more than a decade apart: The first was reported to the CEH in 2000, and the second was presented as expert witness testimony by Ball in 2013 in the prosecution of General José Efraín Ríos Montt.

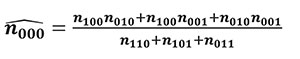

At the time of HRDAG’s initial MSE analysis, researchers used the form of MSE with which they were familiar: a formula outlined in a 1974 text by Marks, Seltzer, and Krótki, specifically equation 7.118 (with a slight modification in notation):

where in this case,

n000 = the number of victims who were not reported to any of the three projects—to CEH, CIIDH, or REMHI

n111 = the number of victims who were reported to all three projects

n110 = the number of victims who were reported to CEH and CIIDH but not to REMHI

n101 = the number of victims who were reported to CEH and to REMHI, but not to CIIDH

n011 = the number of victims who were reported to CIIDH and to REMHI, but not to CEH

n100 = the number of victims who were reported only to CEH and not to CIIDH, nor to REMHI

n010 = the number of victims who were reported only to CIIDH and not to CEH nor to REMHI

n001 = the number of victims who were reported only to REMHI and not to the CEH nor to the CIIDH
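For readers who want to experiment with these cell definitions, here is a sketch of one standard three-list estimator: the one implied by a loglinear model with all two-way interactions and no three-way interaction, under which n000 = n111 · n100 · n010 · n001 / (n110 · n101 · n011). It is shown as an illustration using the cell names above and is not claimed here to be identical to equation 7.118 of Marks, Seltzer, and Krótki. The counts are hypothetical.

```python
def three_list_estimate(n111, n110, n101, n011, n100, n010, n001):
    """Estimate n000, the victims missed by all three lists.

    Assumes a loglinear model with all pairwise interactions between lists
    and no three-way interaction, so the three-way cross-product ratio is 1.
    """
    denominator = n110 * n101 * n011
    if denominator == 0:
        raise ValueError("a two-list-only cell is empty; estimate is undefined")
    return n111 * n100 * n010 * n001 / denominator


# Hypothetical counts (CEH, CIIDH, REMHI order, as in the definitions above).
counts = dict(n111=10, n110=20, n101=20, n011=20, n100=40, n010=40, n001=40)
n000 = three_list_estimate(**counts)
total = sum(counts.values()) + n000
print(n000, total)  # 80.0 270.0
```

Note how sensitive the estimate is to small cells in the denominator: halving any one of n110, n101, or n011 doubles the estimated number of undocumented victims.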

Equation 1 allowed them to use all three data sources, with a small adjustment for the possibility that the data sources were not fully independent (i.e., if a victim was reported to one source, he or she might be more or less likely to also be reported to one of the other sources). However, this method did not formally model or estimate any potential relationships between the sources.

Statistical Patterns Consistent with Genocide

The statistical estimates calculated using the method described in the previous section ultimately formed a central piece of the CEH’s finding that approximately 200,000 people were killed and “disappeared” during the 36-year conflict.

Even more importantly, Ball made a second set of estimates comparing the deaths suffered by members of the Mayan population with the deaths suffered by non-Mayans in the same regions. This comparison was key to the question of whether patterns of violence were consistent with arguments that genocide occurred during the conflict.

Specifically, Ball and his colleagues combined estimates of the total number of deaths in these two groups with census estimates of the number of people alive in each group in those regions, which allowed them to estimate homicide rates for the two communities.
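The calculation is simple once the MSE totals are in hand. A sketch with hypothetical numbers (not the CEH's): each group's homicide rate is its estimated deaths divided by its census population, and the relative risk is the ratio of the two rates.

```python
def homicide_rate(estimated_deaths, census_population):
    """Estimated deaths per person in the population at risk."""
    return estimated_deaths / census_population


# Hypothetical MSE estimates and census counts for one region.
rate_maya = homicide_rate(estimated_deaths=600, census_population=10_000)
rate_non_maya = homicide_rate(estimated_deaths=100, census_population=10_000)
relative_risk = rate_maya / rate_non_maya
print(f"{rate_maya:.3f} {rate_non_maya:.3f} {relative_risk:.1f}")  # 0.060 0.010 6.0
```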

The results were published in the final chapter of Volume XII of the CEH’s report. The analysis found that members of the Mayan community suffered homicide rates between five and seven times higher than non-Mayans. This stark difference provided the basis for the CEH’s conclusion “… that agents of the State of Guatemala, within the framework of counterinsurgency operations carried out between 1981 and 1983, committed acts of genocide against groups of Mayan people which lived in the four regions analysed [including Quiché].”

It was crucial that these comparisons were conducted using MSE, which includes an estimate of the number of unknown, unobserved victims. Otherwise, the comparison would have conflated differences in how often victims were reported with differences in how many victims there actually were.

For a variety of reasons, the three sources used in this analysis each covered a different proportion of the total population of victims. For example, in some of the regions under study, the sources may have documented a larger proportion of the total number of Mayan victims and a smaller proportion of the total number of non-Mayan victims (perhaps because members of the Mayan community were more aware of and invested in these documentation efforts). It would therefore be insufficient to conclude that more Mayans were killed than non-Mayans based solely on these reported numbers of victims.

To draw this conclusion in a statistically rigorous way requires methods, like MSE, that adjust for this potential imbalance in coverage by estimating what is missing from each source. MSE methods are not the only approach to this problem—surveys and other methods of random sampling can also be used to address these challenges. However, these approaches are frequently expensive and time-consuming, while MSE methods have the advantage of relying on data that may already exist, having been collected for other purposes.
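To see why raw counts can mislead, consider a hypothetical region where, by construction, 1,000 Maya and 200 non-Maya were killed, so the true ratio is 5. Suppose two lists cover Maya victims at rates 0.6 and 0.5 but non-Maya victims at only 0.3 and 0.2. The sketch below uses expected counts (no randomness) and assumes the lists are independent, an assumption made only to keep the example small; it compares the ratio of documented victims with the ratio of two-list estimates.

```python
def expected_cells(true_total, p_a, p_b):
    """Expected counts on both lists, A only, and B only, assuming independence."""
    both = true_total * p_a * p_b
    a_only = true_total * p_a * (1 - p_b)
    b_only = true_total * (1 - p_a) * p_b
    return both, a_only, b_only


def documented_and_estimated(true_total, p_a, p_b):
    """Return (victims on at least one list, two-list population estimate)."""
    both, a_only, b_only = expected_cells(true_total, p_a, p_b)
    documented = both + a_only + b_only
    # Two-list (Petersen) estimate: |A| * |B| / |A and B|
    estimated = (both + a_only) * (both + b_only) / both
    return documented, estimated


maya_doc, maya_est = documented_and_estimated(1000, 0.6, 0.5)
non_doc, non_est = documented_and_estimated(200, 0.3, 0.2)
print(f"documented ratio {maya_doc / non_doc:.2f}")  # inflated by unequal coverage
print(f"estimated ratio  {maya_est / non_est:.2f}")  # recovers the true ratio of 5
```

Here the documented counts (800 versus 88) overstate the disparity by almost a factor of two, while the estimates that account for what each list missed recover the true ratio.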

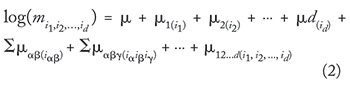

Shortly after completing the analysis for the CEH, Ball and his team at HRDAG learned about the foundational MSE work published in a textbook by Bishop, Fienberg, and Holland, which guided HRDAG’s application of MSE methods for years to come. In particular, Chapter 6 laid out how to use loglinear models to calculate the same estimate described above—the number of victims who were not reported to any of the existing sources.

Most importantly, this class of models provides a way to formally estimate potential correlations between the lists. Equation 2 shows the general approach, using the Bishop, Fienberg, and Holland notation, for d lists:

$$\log m_{i_1 i_2 \ldots i_d} = \mu + \sum_{j=1}^{d} \mu_{j(i_j)} + \sum_{j<k} \mu_{jk(i_j i_k)} + \cdots$$
where $m_{i_1 i_2 \ldots i_d}$ is the expected number of individuals with list intersection $\{i_1, i_2, \ldots, i_d\}$ (i.e., $i_j = 1$ if a victim is recorded on list $j$ and $i_j = 0$ otherwise), and the μ terms are the various coefficients for the main effects and possible interactions among lists.
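A loglinear model for an incomplete table can be fit as a Poisson regression on the observed cells. Because each list is coded 0/1, the intercept is the log of the expected count in the all-zero cell, so exp(intercept) estimates n000. Below is a minimal NumPy sketch of a Newton-Raphson fit for three lists; the function name, the choice of interaction terms, and the counts are hypothetical illustrations, not HRDAG's implementation.

```python
import itertools

import numpy as np


def fit_loglinear(counts, interactions=((0, 1),), n_iter=100, tol=1e-10):
    """Fit a Poisson loglinear model to the observed cells of a 2^d table.

    counts: dict mapping capture patterns such as (1, 0, 1) to counts; the
            all-zero pattern is omitted because it can never be observed.
    interactions: pairs of list indices to include as two-way terms.
    Returns exp(intercept), the estimated number of victims missed by all lists.
    """
    cells = sorted(counts)
    # Design matrix: intercept, one main effect per list, one column per interaction.
    X = np.array(
        [[1.0, *cell, *(cell[j] * cell[k] for j, k in interactions)] for cell in cells]
    )
    y = np.array([counts[c] for c in cells], dtype=float)
    # Start from a least-squares fit to log(y + 0.5), which avoids log(0).
    beta = np.linalg.lstsq(X, np.log(y + 0.5), rcond=None)[0]
    for _ in range(n_iter):
        mu = np.exp(X @ beta)
        # Newton-Raphson step for the Poisson log-likelihood (canonical log link).
        step = np.linalg.solve(X.T @ (mu[:, None] * X), X.T @ (y - mu))
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    return float(np.exp(beta[0]))


# Hypothetical three-list counts (all cells except the unobservable (0, 0, 0)).
observed = {
    (1, 1, 1): 10, (1, 1, 0): 20, (1, 0, 1): 20, (0, 1, 1): 20,
    (1, 0, 0): 40, (0, 1, 0): 40, (0, 0, 1): 40,
}
print(fit_loglinear(observed, interactions=()))                        # independence
print(fit_loglinear(observed, interactions=((0, 1), (0, 2), (1, 2))))  # all pairs
```

With all three pairwise terms, the model has as many parameters as observed cells, fits them exactly, and reproduces the closed-form estimate n111 · n100 · n010 · n001 / (n110 · n101 · n011), here 80. Models with fewer interaction terms encode stronger assumptions about how the lists relate to one another, which is why model choice matters in practice.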

In 2011, Ball and colleagues were invited by then-Attorney General Claudia Paz y Paz to update and expand their earlier analysis for the CEH. This updated analysis included numerous features that strengthened and confirmed the findings from the CEH report.

First, they added a fourth data source, the National Reparation Program, to the analyses. Second, they updated the record linkage step, moving from the manual review conducted in the 1990s to a formal supervised approach combining automated linkage with human review. Third, as described above, they updated the MSE modeling approach, in particular to rely on weaker (and more plausible) assumptions about the relationships between sources.

Lastly, they included two important sensitivity analyses in the results presented at trial. One of the questions frequently asked about MSE analyses using data collected for other purposes is “How do you know the data are accurate and not incorrect or potentially fabricated in some way?” The challenging answer is that in general, we do not know for sure that all of the data used are accurate and error-free—but we can use sensitivity analyses to test the impact of including potentially incorrect data on substantive conclusions.

In this case, Ball considered what would happen if between 0% and 50% of the records documented by just one source were incorrect, and thus dropped from the analyses. The estimated risk of homicide for members of the Mayan population remained substantially higher than the risk for the non-Mayan population even when up to half the records from a single source were dropped. In other words, the conclusion presented at trial would be unchanged even if many of the records were fabricated.
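One way to sketch such a check, under stated assumptions: use the closed-form three-list estimator (not the four-source loglinear models used at trial), interpret "records documented by just one source" as the cell n100 (records only on the first list), and drop a fraction of those records from the Maya counts only, which can only lower the Maya estimate. The helper names, counts, and populations are all hypothetical.

```python
def three_list_total(n111, n110, n101, n011, n100, n010, n001):
    """Observed victims plus the estimated n000 (no three-way interaction model)."""
    n000 = n111 * n100 * n010 * n001 / (n110 * n101 * n011)
    return n111 + n110 + n101 + n011 + n100 + n010 + n001 + n000


def drop_single_source(counts, fraction):
    """Discard a fraction of the records that appear only on the first list (n100)."""
    perturbed = dict(counts)
    perturbed["n100"] = counts["n100"] * (1 - fraction)
    return perturbed


# Hypothetical counts for two groups in one region, and their census populations.
maya = dict(n111=10, n110=20, n101=20, n011=20, n100=40, n010=40, n001=40)
non_maya = dict(n111=5, n110=5, n101=5, n011=5, n100=5, n010=5, n001=5)
pop_maya, pop_non_maya = 20_000, 20_000

rate_non_maya = three_list_total(**non_maya) / pop_non_maya
risks = {}
for fraction in (0.0, 0.1, 0.2, 0.3, 0.4, 0.5):
    rate_maya = three_list_total(**drop_single_source(maya, fraction)) / pop_maya
    risks[fraction] = rate_maya / rate_non_maya
    print(f"drop {fraction:.0%} of n100: relative risk {risks[fraction]:.2f}")
```

In this toy setup the relative risk falls from 6.75 to 5.25 as half of the single-source records are removed, but stays well above 1, which is the shape of argument the sensitivity analysis makes.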

The second set of sensitivity analyses related to the census data used to calculate the relative risks. Specifically, these analyses considered the possibility of under-registration of the Mayan population in the 1981 census. Again, sensitivity analyses with between 0% and 20% under-registration rates for the Mayan population still resulted in a substantial difference in relative risk of homicide. It should also be noted that a 20% under-registration rate is considerably higher than rates demographers would expect based on analyses of previous censuses.
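The census check can be sketched the same way. Assuming an under-registration rate u means the census counted only a share 1 - u of the actual Maya population, the corrected population is census / (1 - u), so the Maya homicide rate, and hence the relative risk, shrinks by the factor 1 - u. The function name and numbers are hypothetical.

```python
def adjusted_relative_risk(deaths_maya, census_maya, deaths_non_maya,
                           census_non_maya, under_registration):
    """Relative risk after inflating the Maya census count for under-registration.

    If the census missed a share u of the Maya population, the true population
    is census / (1 - u), which lowers the Maya homicide rate by a factor (1 - u).
    """
    true_maya = census_maya / (1 - under_registration)
    rate_maya = deaths_maya / true_maya
    rate_non_maya = deaths_non_maya / census_non_maya
    return rate_maya / rate_non_maya


# Hypothetical estimated deaths and census counts.
for u in (0.0, 0.05, 0.10, 0.15, 0.20):
    rr = adjusted_relative_risk(600, 10_000, 100, 10_000, u)
    print(f"under-registration {u:.0%}: relative risk {rr:.2f}")
```

Because the correction is a simple multiplicative factor, even 20% under-registration moves a relative risk of 6 only to 4.8 in this example.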

Court Case and Trial

Perhaps not surprisingly, much like the slow development of the data and methods described in previous sections, the case itself evolved over decades, thanks to the tireless work of many individuals and organizations. These are described in more detail in a forthcoming book chapter.

One key development was the appointment of Claudia Paz y Paz as Guatemala’s attorney general in December 2010. During her tenure, there was substantial progress in the fight against impunity for both past and current crimes.

One of the key challenges for the legal team in this case was to prove that Ríos Montt was responsible for acts of genocide committed by the army. That is, prosecutors had to show not only that all the terrible acts of violence being described by individual witnesses occurred, but that they met the specific legal requirements for genocide. Paz y Paz was intimately familiar with these arguments because she had led the analysis for the CEH report more than a decade earlier.

These arguments, together with the statistical analyses introduced by Ball, were key to making this link. The dramatic difference in rates of violence suffered by Mayan compared with non-Mayan community members was consistent with the argument that the violence was targeted against the Ixil-Mayan people. Strengthening the original findings in the CEH report, the updated analysis, with a new data source and updated record linkage and MSE methods, produced substantively similar conclusions: Members of the Mayan community in the Ixil region in the early 1980s were between five and eight times more likely to be killed by the Army than non-Mayans.

Ball testified on April 12, 2013, spending two hours on the stand presenting his updated analyses. He was one of several expert witnesses, all of whom introduced key findings and evidence that acts of genocide did occur in the Ixil region in the early 1980s. This pattern is common. Statistical evidence is rarely used on its own; rather, statistical analyses serve to support (or argue against) charges based on multiple lines of investigation.

On May 10, 2013, Judges Barrios, Bustamante, and Xitumul found General Ríos Montt guilty of crimes against humanity and genocide against indigenous Maya Ixil and sentenced him to 80 years in prison. The fact that this verdict was handed down in the local courts of Guatemala makes Ríos Montt’s case particularly noteworthy: This was the first time that a former head of state was found guilty of genocide by his own national court system. In their 718-page written opinion, the judges called out Ball’s testimony as an important component of the case:

[Ball’s] expert report provides evidentiary support for the following reasons: a) It shows in statistical form that from April 1982 to July 1983, the army killed 5.5% of the indigenous people in the Ixil area. b) It confirms, in numerical form, what the victims said. c) It explains thoroughly the equation, analysis, and the procedure used to obtain the indicated result. d) The report establishes that the greatest number of indigenous deaths occurred during the period April 1982 to July 1983 when José Efraín Ríos Montt governed. e) The expert is a person with extensive experience in statistics.

—Translation by Patrick Ball

Epilogue

Unfortunately, this landmark was not to stand. On May 20, 2013, Guatemala’s Constitutional Court threw out the genocide conviction and prison sentence, citing procedural errors unrelated to any of the testimony, and said the trial had to restart. General Ríos Montt was sent home under house arrest.

The following year, in May 2014, Attorney General Claudia Paz y Paz was forced to leave office by a Constitutional Court ruling. Typically, attorneys general serve four-year terms in Guatemala. However, Paz y Paz’s term ended after only three-and-a-half years, due to the court’s conclusion that she was completing the term of her predecessor, not serving her own full four-year term.

In July 2015, Guatemala’s forensic authority declared General Ríos Montt mentally unfit to stand trial; the following month, a Guatemalan court ruled that he could stand trial but could not be sentenced, since he is suffering from dementia. The trial was set to restart in January 2016, but was suspended again to resolve outstanding legal petitions.

The general was 86 when he was convicted. He is now 90. At the time of this writing, he remains under house arrest (first imposed in 2012) and is awaiting a new trial. Unfortunately, it is unclear whether that new trial will occur.

This and other cases continue to wind their way through the Guatemalan justice system. As recently as February 2017, a different set of judges heard an argument to try Ríos Montt for the 1982 Dos Erres massacre, an incident that was not included in the previous genocide charges.

In the meantime, many of the key witnesses from the genocide case remain ready and willing to testify again, even if they also remain skeptical that the trial will move forward.

Further Reading

For further information about the CIIDH database, see HRDAG’s project page.

The CEH report (PDF download).

For more information about the data collection projects and analyses described here, see Chapters 6 and 11 of “Making the Case.”

For more methodological detail, see “Multiple Systems Estimation: The Basics.” Further technical detail regarding how HRDAG implements these methods can be found in “Computing Multiple Systems Estimation in R.”

For a history of the two-system estimator, see Goudie, I.B.J. and Goudie, M., 2007. Who captures the marks for the Petersen estimator? Journal of the Royal Statistical Society: A.

For overviews of record linkage, see Scheuren, Herzog, and Winkler, Data Quality and Record Linkage Techniques. 2007. New York, NY: Springer Science+Business Media LLC, and Christen, P. 2012. Data Matching: Concepts and Techniques for Record Linkage, Entity Resolution, and Duplicate Detection. Berlin-Heidelberg: Springer-Verlag.

About the Authors

Patrick Ball is director of research at the Human Rights Data Analysis Group and has more than 25 years of experience in conducting quantitative analysis for truth commissions, non-governmental organizations, international criminal tribunals, and United Nations missions in more than 30 countries.

Megan Price is executive director of the Human Rights Data Analysis Group, where she designs strategies and methods for statistical analysis of human rights data for projects in a variety of locations, including Guatemala, Colombia, and Syria. She is currently finishing a book on statistics and human rights (with Robin Mejia) that is due out from Taylor & Francis later this year.