Human Rights and Mixed Methods

Research in the field of human rights can have many goals. Among others, it serves to uncover and document evidence of crimes, seeks to tell the stories of victims and survivors, and strives to inform and influence policies and strategies to deal with human rights violations. Most commonly, it is defined as seeking to uncover “the truth”—what happened, to whom, by whom, where, when, and how, and how many were affected.

Human rights practitioners and researchers deploy a range of methods to answer these questions. The method of enquiry is often dictated by practical realities such as the experience and expertise of the human rights researcher or activist (e.g., disciplinary training and background), time, funding, and other resources. There is, however, a general perception that research in the field lacks methodological rigor (Coomans, Grünfeld, and Kamminga, 2010).

When a clear methodological approach is selected, the preferred approach is qualitative in nature, including semi-structured interviews, case studies, and ethnographic methods. Such methods are valuable in providing in-depth information about what happened to whom, how, and why. They are less effective in assessing the magnitude and patterns of violations, or quantifying population perceptions and needs, which are increasingly important; for example, in examining the widespread nature of mass atrocities or systematic social and economic rights violations, and understanding how affected populations want to address the harm they suffered.

In recent decades, pioneers like Clyde Snow and Patrick Ball have demonstrated the importance and usefulness of quantitative methods in documenting human rights. It is with a similar interest that we entered the field of human rights 15 years ago. Specifically, our goals were to improve methodological and measurement practices, and to engage with communities on critical human rights questions through social science methods.

As our experience in conducting over 30 studies globally on mass atrocities has taught us, mixed methods are particularly well adapted to human rights research. This article outlines the mixed-methods approach we have developed and deployed to examine the impact of mass atrocity on populations, and their perceptions and attitudes about justice and human rights. We explore the development and formalization of a mixed methods approach and multi-level analysis, and discuss emerging methodologies and opportunities from the information and mobile technology revolutions.

Mixed Methods and Their Evolution into Mainstream Research

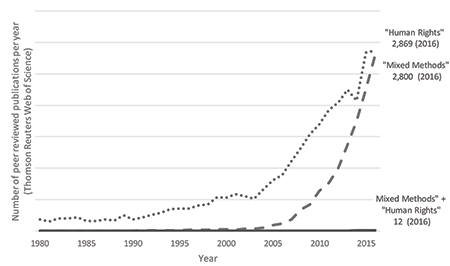

An analysis of abstracts of peer-reviewed publications in Web of Science-indexed journals shows a relatively rapid growth of papers referencing “human rights,” and an even steeper growth of papers referencing “mixed methods” over the last decade. By 2016, there were roughly equal numbers of papers in indexed journals with abstracts referencing either “human rights” or “mixed methods.” Few papers, however, referenced both “human rights” and “mixed methods”: 12 in total, or less than 0.5% of all human rights papers. In part, this reflects a lack of methodological transparency in many human rights papers, but it also highlights how rarely human rights scholars have adopted mixed methods.

John Creswell defines mixed-methods research as a methodology for conducting research that involves collecting, analyzing, and integrating (or mixing) quantitative and qualitative research (and data) in a single study or a longitudinal program of inquiry (Creswell, 2003). Mixed methods involve implementing qualitative and quantitative methods sequentially (one follows the other, in either order), concurrently (both methods are employed in parallel), or in an integrated manner (one method predominates and the other is integrated within it).

According to Johnson, Onwuegbuzie, and Turner (2007), mixed-methods research is a synthesis of qualitative and quantitative research: a third methodological or research paradigm (qualitative and quantitative research being the first two). It is said to provide the most informative, complete, balanced, and useful research results while recognizing the value of traditional quantitative and qualitative research.

Mixed methods have emerged in recent decades as a best practice approach to conducting social science research. Explicitly combining both qualitative and quantitative approaches into a single form of research inquiry helps overcome the limitations of a single design. The characteristic arguments are that qualitative methods lack representativeness and quantitative methods lack depth.

Mixing both approaches seems intuitive. However, the evolution of mainstream research approaches took several centuries, rooted in debates about singular or universal truth (Plato and Socrates) versus multiple or relative truth (Protagoras and Gorgias) versus balances or mixtures (Aristotle, Cicero, Sextus Empiricus). Academic training and ways of doing things across disciplines are often rooted in one method or the other; rarely both.

The emergence of mixed-methods research was less philosophical than practical: it arose from the need for an in-depth but also quantifiable understanding of social issues, with direct practical outcomes in shaping programs and policies.

“Our” Mixed Methods

Understanding what people want in terms of justice and reconstruction after mass atrocities and other violations of human rights is a challenging endeavor. It requires a systematic, inclusive, and representative approach so all voices are adequately heard and represented, and violations are documented reliably. At the same time, it requires a contextualized and in-depth approach to understanding complex processes.

Mixed methods are particularly well adapted to meet these requirements. Our research team has used a sequential exploratory approach for over a decade. This approach uses a qualitative data collection phase to narrow down our research scope and develop our quantitative research instrument, followed by implementation of quantitative data collection.

For our quantitative questionnaire, we developed an embedded qualitative design by including open-ended questions. To make data entry and analysis easier, we add a list of common responses gathered through the pilot for the interviewer to select, but with clear instructions to the interviewer not to read the responses. When the interviewer is uncertain whether a response falls under one of the listed responses or the response is other than what is listed, they can enter the response as phrased by the respondent.

This, however, is something we only learned over time. Charged with doing an assessment of attitudes about reconstruction in Iraq in 2003, we used a largely qualitative approach to gather in-depth information on experiences and perspectives among a range of stakeholders. We organized focus groups along key socio-demographic characteristics (age, gender, religion, geographical, and group affiliation).

The result was a huge amount of data that required a year to encode, translate, and render usable for program and policy insights; in other words, too late for any practical outcome. It yielded in-depth insights into each group’s human rights experiences under the Saddam Hussein regime and their desires and perceptions; however, because the interviewees were chosen purposively for their views and were not randomly sampled from the population, we could not analyze how often specific opinions prevailed or demonstrate differences between groups.

Two years later, we became involved in a study to examine perceptions and attitudes about justice in northern Uganda. President Museveni had referred the conflict with the Lord’s Resistance Army (LRA) in northern Uganda to the International Criminal Court (ICC). The move resulted in a major debate about what mechanism, if any, was best to deal with the atrocities, pitting local justice against international justice. It also revived a peace-versus-justice debate and raised the question of the acceptability of amnesties for the sake of peace.

At the time, several researchers had conducted valuable qualitative studies of the factors influencing peace and justice considerations in the north, primarily comprising interviews with Ugandan government officials, humanitarian workers, traditional and religious leaders, former LRA members, and others. What was lacking, however, was a sense of the relative frequency of various opinions among the population.

To fill that gap, we developed a quantitative survey, adapting population-based sampling to measuring opinions and attitudes about specific transitional justice mechanisms, including trials, traditional justice, truth commissions, and reparations. However, to build a contextually relevant instrument that adequately reflected the depth and complexity of the issues and cultural nuances, we first undertook a qualitative study (key informant interviews) to help us understand the context. The key informant interviews helped us design our research instrument and research protocol such as where specifically to sample, which sampling design to deploy, and the sample size calculation.
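To make the sample size step concrete, here is a minimal sketch of a standard calculation for estimating a proportion in a population-based survey. It is a textbook formula, not necessarily the one used in the Uganda study; the function name `sample_size` and the default values (50% expected proportion, 5-point margin of error, design effect of 2.0 for cluster sampling) are illustrative assumptions.

```python
import math
from statistics import NormalDist


def sample_size(p=0.5, margin=0.05, confidence=0.95,
                design_effect=2.0, response_rate=1.0):
    """Interviews needed to estimate a proportion p within +/- margin.

    design_effect inflates the simple-random-sample size to account for
    cluster sampling; response_rate inflates it further for expected refusals.
    All defaults are placeholders, not values from any study.
    """
    # Two-sided critical value of the standard normal distribution.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Size required under simple random sampling.
    n_srs = z ** 2 * p * (1 - p) / margin ** 2
    # Adjust for the sampling design and for non-response.
    return math.ceil(n_srs * design_effect / response_rate)


if __name__ == "__main__":
    print(sample_size(design_effect=1.0))  # simple random sampling
    print(sample_size())                   # cluster design, assumed deff = 2.0
```

In practice, the qualitative phase can inform these inputs, for example by indicating which sub-groups need separate estimates and therefore their own sample size targets.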

This study used quantitative sampling and assessment approaches, although it included many open-ended questions with common responses listed, but not read to the interviewees, and space for verbatim recording of responses when needed. These qualitative responses also helped us to interpret the results of the survey.
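The handling of open-ended questions described above, where pre-coded options are ticked by the interviewer and verbatim text is recorded when needed, can be sketched as a small data structure. This is only an illustration under stated assumptions: the response list, the `OTHER` code of 99, and the function names are hypothetical and are not taken from the survey instrument.

```python
from collections import Counter
from dataclasses import dataclass, field

# Hypothetical pre-coded list built from pilot interviews (illustrative only).
PRECODED = {
    1: "absence of violence",
    2: "unity and living together",
    3: "forgiveness",
}
OTHER = 99  # assumed code for answers that fit none of the listed options


@dataclass
class OpenEndedAnswer:
    """One respondent's answer to an open-ended question."""
    codes: list = field(default_factory=list)  # options ticked by the interviewer
    verbatim: str = ""  # respondent's own words, kept when coding is uncertain


def record_answer(selected, verbatim=""):
    """Validate and store an answer; an 'other' answer needs verbatim text."""
    for code in selected:
        if code != OTHER and code not in PRECODED:
            raise ValueError(f"unknown code: {code}")
    text = verbatim.strip()
    if OTHER in selected and not text:
        raise ValueError("'other' requires the verbatim response")
    return OpenEndedAnswer(codes=list(selected), verbatim=text)


def tabulate(answers):
    """Count how many respondents gave each coded answer."""
    counts = Counter()
    for answer in answers:
        for code in set(answer.codes):  # count each code once per respondent
            counts[PRECODED.get(code, "other")] += 1
    return dict(counts)


if __name__ == "__main__":
    answers = [
        record_answer([1, 3]),
        record_answer([OTHER], "people sharing meals again"),
    ]
    print(tabulate(answers))
```

The coded answers feed directly into frequency tables, while the verbatim field preserves the qualitative material for later interpretation.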

More recently, working with a multidisciplinary group of colleagues, we have used multi-level analysis as a form of mixed methods to assess the effectiveness of the Colombian reparations program for victims (Pham, et al., 2016). The convergent design combined three levels. The micro level was a data set on population perceptions of the Colombian reparations program. The meso level collected qualitative data at the organizational level on the Unidad para las Victimas (Victims Unit; VU), the organization in charge of implementing the reparations program and overseeing the domestic database of victims registered in it. The macro level was enabled by global data sets of transitional justice mechanisms; in this case, the reparations data gathered by the Transitional Justice Research Collaborative.

These three components were conducted in parallel over a 10-month period in 2014–2015. The macro-level analyses focused primarily on the formal and legal characteristics of the Colombian reparations program in comparison with others around the world; they did not result in an understanding of how the reparations program in Colombia was actually being implemented.

While the meso-level analyses provided a wealth of detailed information about the institutional framework, the organizational charts and flow charts describing the formal processes did not always capture how power and influence worked in the Colombian bureaucracy. The analyses did, however, begin to reveal the political issues and economic constraints the VU faced in trying to carry out its mandate.

The micro-analyses complemented the first two by providing insight on the beneficiaries’ and the general population’s perceptions about the reparation programs and how these have affected them from a self-reported perspective, according to a variety of complex benchmarks: social cohesion, attitudes toward the state, moral and physical wellbeing, and socioeconomic stabilization. Taken together, the evaluation results provided invaluable insights and information to the government and the Victims Unit on how to revise their program activities to achieve greater efficiency and efficacy, as well as achieve greater impact on alleviating physical and psychological harm.

The idea of using mixed methods may seem obvious in retrospect, but at the time of our initial research, perception surveys about peace, justice, and human rights more broadly were still a somewhat uncommon proposition. This created an opportunity for us to develop an approach that highlighted the importance of contextualization and localization for developing instruments that combined quantitative and qualitative elements. The result, more than a decade later, is a process of identifying dimensions, domains of measurement, and ultimately specific measures that uses an interdisciplinary, participatory approach informed by both theoretical frameworks and experience.

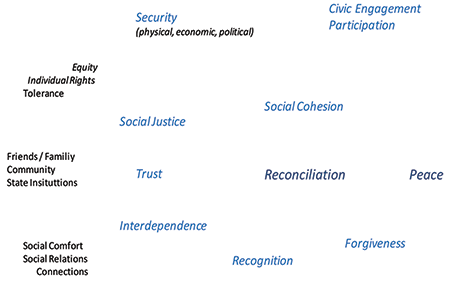

The relevant measures and manifestations of individuals’ perceptions and attitudes differ between countries, reflecting cultural and contextual differences, especially in relation to the nature and dynamics of conflicts and post-conflict reconstruction. In other words, context shapes how we measure complex phenomena. For example, the concept of “reconciliation” must almost by definition be context-specific, with measures built on local understandings. Reconciliation in Rwanda, in how it is defined and measured, may look very different from reconciliation in northern Uganda or Timor-Leste.

This is not to say that global standardized measures are not possible, but they may not capture important nuances across contexts. This matters because our work is used locally. While global indexes can be important for comparative analyses, our goal is to conduct research that offers insights to the communities where we work, to aid the post-conflict recovery processes as they occur in each context.

Reconciliation is, in fact, a common but challenging theme across our research. The various countries are very different in terms of the socio-economic and political environment (both before and after mass violence), origin and nature of the violence, and resulting trauma and effects on relationships among actors. Thus, the meaning of (and means for) reconciliation itself varies.

For example, in Uganda in 2005, 90% of all respondents said that there was a need for reconciliation, but specific understandings of reconciliation varied from district to district in the North. Respondents from the non-Acholi districts were more likely to associate reconciliation with forgiveness than those from the Acholi districts (62% versus 43%, respectively). In Soroti, a non-Acholi district, 35% defined justice in terms of reconciliation, while in the two Acholi districts of Gulu and Kitgum, only 12% and 3%, respectively, used a similar definition (Pham, et al., 2005).

These findings were relevant because, in the controversy about justice versus peace, some observers had promoted the idea that Acholis preferred forgiveness over prosecution of LRA leaders.

In Cambodia, we were able to capture how the population’s opinions of justice and reconciliation evolved through two studies in 2008 and 2010. We found that respondents defined reconciliation differently in 2010 compared to 2008. In 2008, a majority of respondents defined reconciliation as the absence of violence and conflict (56%); however, only 15% did so in 2010. In the 2010 survey, a majority (54%) characterized reconciliation as unity and living together, and more mentioned communicating and understanding each other (38%) and compassion for each other (27%) as reconciliation. But while the understanding of reconciliation changed, the surveys found that levels of comfort in interacting with members of the former Khmer Rouge regime, in various day-to-day social settings, actually changed very little between 2008 and 2010 (Pham, et al., 2011).

Given how frequently we see these variations, we have not developed a formal definition of reconciliation. Instead, we have somewhat informally mapped out related concepts that help us work with partners to develop locally relevant research instruments and working definitions.

The result has allowed us to measure various dimensions of reconciliation. In this effort, we conceive reconciliation as a process rather than a set of attributes. What this process looks like is shaped by the context in which reconciliation takes place, challenging the notion that a standardized, quantitative, unified measurement is possible. It emphasizes instead a mixed-method approach to understanding reconciliation.

Reconciliation may seem straightforward to define and measure in contexts of inter-ethnic violence, but our research results challenge the usefulness of any such simplistic construct: data from Eastern Democratic Republic of the Congo (DRC) and Burundi suggest significant improvement over time in the level of trust and inter-connectedness between communities, yet whether communities are “reconciled” is difficult to argue, and some level and/or risk of violence between communities continues to exist.

The key lesson we learned across countries was that we could not identify a definitive list of indicators of reconciliation structured for quantitative measurement across contexts. Rather, we needed to define and select indicators for each context and include elements that are less easily quantifiable. Our ability to do so depended on the mixed-methods approach, and was greatly helped by advances in technology.

Digital Tools Advancement

Digital tools, and mobile devices especially, are well adapted for mixed-methods and survey-like applications. We first adopted the idea of encoding data using mobile devices directly in the field in 2003. Digitizing piles of papers and recorded interviews for data analysis taught us the challenges of transcription, encoding, and translation, which can delay the publication of results by a year or more. From that point on, we spent the resources we had previously devoted to photocopies and data entry on hiring programmers instead, which ultimately led to KoBoToolbox—a free and open-source digital data collection tool that has now been widely accepted by the humanitarian community, as well as human rights and academic researchers and practitioners.

KoBoToolbox and other similar tools can be used as an alternative to paper-based data gathering. This process of mobile data collection works like traditional surveys, but data entry takes place directly in the field during the interview, on a mobile device. Mobile data collection greatly enhances the quality of data (e.g., automated quality control, skip patterns …) and accelerates the aggregation and usability of data. Importantly, the integration of audio recording, video recording, camera, and other capabilities within the same tool enhances the possibility of mixing methods.

For example, open-ended questions such as “What does peace mean to you?” or “What should be done for victims affected by the LRA?” traditionally are reserved for qualitative research for two major reasons: It is difficult to input and analyze such a volume of text data in a survey form involving interviews with hundreds or thousands of individuals, and it often requires more probing or follow-up questions to understand better the response provided.

With KoBoToolbox, researchers have the ability to embed an audio question within the questionnaire, encode complex skip patterns, and assign qualitative questions to a select sub-sample of respondents. This helps greatly to overcome the limitations of traditional approaches.

For example, a sub-sample of respondents may be asked to explain in depth the response they provided using an open-ended follow-up question. The questionnaire can also include questions that capture images or videos, for example, to capture community maps or body maps (a type of participatory method used when exploring the effects of sexual violence). Records from interviews of parents and children can easily be linked.
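The skip patterns and sub-sample assignment described above can be sketched in a few lines of generic Python. This does not use or reproduce the KoBoToolbox API; the question names, the `relevant` rules, and the 10% sub-sample fraction are hypothetical, chosen only to show the logic.

```python
import hashlib

# Hypothetical questionnaire: a question may carry a relevance rule
# (a skip pattern) that is evaluated against the answers collected so far.
QUESTIONS = [
    {"name": "heard_of_icc", "type": "yes_no"},
    {"name": "icc_opinion", "type": "text",
     "relevant": lambda a: a.get("heard_of_icc") == "yes"},
    {"name": "peace_meaning", "type": "select"},
    {"name": "peace_meaning_audio", "type": "audio",
     "relevant": lambda a: a.get("in_subsample", False)},
]


def in_subsample(respondent_id, fraction=0.1):
    """Deterministically assign roughly `fraction` of respondents
    to the in-depth, audio-recorded follow-up question."""
    digest = hashlib.sha256(respondent_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2 ** 64  # value in [0, 1)
    return bucket < fraction


def questions_to_ask(answers):
    """Return the names of the questions whose relevance rule is satisfied."""
    always = lambda a: True  # questions without a rule are always asked
    return [q["name"] for q in QUESTIONS if q.get("relevant", always)(answers)]


if __name__ == "__main__":
    answers = {"heard_of_icc": "no", "in_subsample": in_subsample("R-0001")}
    print(questions_to_ask(answers))
```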

All of this information is collected in a unified manner (one device, various data streams uniquely linked), which makes mixed methods a more doable proposition than handling multiple devices. These possibilities will continue to expand as wearable technologies, sensors, and the Internet of Things continue to evolve and diffuse. This new ecosystem of data, however, is still in its intellectual and operational infancy. Most applications in human rights remain anecdotal or relatively informal, but based on the emerging trends, new data show real value and potential as a force for human rights investigation and research.

Conclusion

The last two decades have seen an expansion of methods and data sources available to document, investigate, and research human rights. Rather than arguing about which method is best, our experience taught us the value and importance of mixing methods, either in sequence or simultaneously, and localizing research to each context. By producing data both in text and numeric forms, mixed-methods research renders an accurate and in-depth portrayal of human rights violations and their consequences.

This approach provides both the quantitative determination of the magnitude of violations, including how widespread and systematic they are, and the type of triangulation needed to obtain sound evidence across disciplines and expertise. Our work has emphasized quantitative methods and large-scale surveys because they filled an important gap, but it would have lacked depth and relevance had the quantitative work and its interpretation not been shaped by qualitative enquiries.

What we see is that human rights researchers already adopt mixed methods intuitively, but they do not formalize this approach and rigorously document their process. Furthermore, many aspects of mixed-methods research in human rights remain under-explored, including detailed modalities, challenges, and best practices. We believe that the mixed-methods approach should be embraced more systematically and deliberately by human rights researchers. This will address the perception of a lack of methodological rigor often associated with scholarship in this field and avoid a rush toward decontextualized measures for the sake of providing “numbers.”

Further Readings

Caracelli, V.J., and Greene, J.C. 1993. Data Analysis Strategies for Mixed-Method Evaluation Designs. Educational Evaluation and Policy Analysis 15:2; pp. 195–207.

Coomans, F., Grünfeld, F., and Kamminga, M.T. 2010. Methods of Human Rights Research: A Primer. Human Rights Quarterly 32(1); pp. 179–186. Baltimore, MD: Johns Hopkins University Press.

Creswell, J.W. 2003. Research design: Quantitative, qualitative, and mixed methods approaches (2nd ed.). Thousand Oaks, CA: Sage.

Johnson, R.B., Onwuegbuzie, A.J., and Turner, L.A. 2007. Towards a Definition of Mixed Methods Research. Journal of Mixed Methods Research 1; p. 112.

Pham, P., Vinck, P., Marchesi, B., Johnson, D., Dixon, P.J., and Sikkink, K. 2016. Evaluating Transitional Justice: The Role of Multi-Level Mixed Methods Datasets and the Colombia Reparation Program for War Victims. Transitional Justice Review 1:4.

Pham, P., Vinck, P., and Stover, E. 2005. Forgotten Voices: A Population-Based Survey of Attitudes About Peace and Justice in Northern Uganda. Berkeley, CA: International Center for Transitional Justice and the Human Rights Center/University of California, Berkeley.

Pham, P., Vinck, P., Balthazard, M., and Hean, S. 2011. After the First Trial: A Population-Based Survey on Knowledge and Perception of Justice and the Extraordinary Chambers in the Courts of Cambodia. Berkeley, CA: Human Rights Center/University of California, Berkeley.

About the Authors

Phuong Pham, PhD, MPH is an assistant professor at the Harvard Medical School and Harvard T.H. Chan School of Public Health and director of evaluation and implementation science at the Harvard Humanitarian Initiative (HHI). She co-founded Peacebuildingdata.org, a portal of peacebuilding, human rights, and justice indicators, and KoBoToolbox, a suite of software for digital data collection and visualization.

Patrick Vinck, PhD is the research director of the Harvard Humanitarian Initiative; an assistant professor at the Harvard Medical School and Harvard T.H. Chan School of Public Health; and lead investigator at the Brigham and Women’s Hospital. His current research examines resilience, peacebuilding, and social cohesion in contexts of mass violence, conflicts, and natural disasters. He is also the co-founder and director of KoBoToolbox, a data collection service, and the Data-Pop Alliance, a Big Data partnership with MIT and ODI. Vinck is also a regular advisor and evaluation consultant to the United Nations.