Statisticians and Forensic Science: A Perfect Match

News stories about the release of wrongly imprisoned people after years of incarceration have helped shine a spotlight on the need to strengthen the scientific foundation of many forensic science disciplines. Statisticians also have helped to illuminate the problem and are seen as a vital part of the solution. This issue of CHANCE explores the role of statisticians in forensic science reform and describes recent research efforts statisticians have undertaken.

This introduction briefly reviews the history leading to recent federal efforts that include establishment of the National Commission on Forensic Science (NCFS) and the National Institute of Standards and Technology (NIST) Organization of Scientific Area Committees (OSAC), and the many ways statisticians are engaged in those organizations.

Statisticians have been interested in forensic and courtroom evidence since the early 19th century. For example, in Europe, Adolphe Quetelet (1796–1874) and Francis Galton (1822–1911) both put forward the notion that fingerprints are unique (although the collection of measurements on them may not be).

As explained by Stigler in 1999, Galton was especially influential in identifying criminals by developing a system of classification of features on the print (types of arches, whorls, and loops). Mathematician Henri Poincaré (with two other French Academicians) submitted a report in the second appeal of the Dreyfus case (1904), criticizing the assumptions made by Alphonse Bertillon in his handwriting analysis of a document reportedly written by Dreyfus.

In the United States, Benjamin Peirce and his son Charles both testified in a handwriting analysis case involving the disputed signature on an apparent codicil to the will of Hetty Green’s aunt, Sylvia Ann Howland (see Meier and Zabell 1980 and Slack 2005 in Further Reading).

Despite these well-known uses of probability and statistics in cases involving forensic evidence, the widespread involvement of statistics in forensic science generally remained limited until the use of population genetics and likelihood ratios in assessing the strength of a “match” between DNA found in evidence at a crime scene and that of a suspect. (Issues of mixtures of DNA found in crime scene evidence, rarity of profiles, and measurement of peaks leading to allelic drop-in/drop-out continue to be investigated; see, for example, Weir 2007.)

While there were earlier reports identifying problems with the scientific underpinning of forensic science disciplines (see below), it was the 2009 National Academies report, Strengthening Forensic Science in the United States: A Path Forward, that elevated the issue to a national cause. The prestige of the Academies and the panel members, the breadth of the report, and the pointed critique of forensic science disciplines all combined to make the report a catalyst for forensic science reform. The executive summary included the startling pronouncement, “With the exception of DNA analysis, however, no forensic method has been rigorously shown to have the capacity to consistently, and with a high degree of certainty, demonstrate a connection between evidence and a specific individual or source.”

The Strengthening Forensic Science report also helped to signal the importance of statisticians to forensic science reform by including two statisticians among its 17 panel members: Constantine Gatsonis (co-chair) and Karen Kafadar.

The Organization of Scientific Area Committees (OSAC) is part of an initiative by the National Institute of Standards and Technology (NIST) and the Department of Justice (DoJ) to strengthen forensic science in the United States. The organization is a collaborative body of more than 500 forensic science practitioners and other experts who represent local, state, and federal agencies; academia; and industry. (To read more about OSAC, visit the NIST forensics website.)

Earlier Insights

Four National Academy reports dealing with specific forms of forensic evidence preceded the Strengthening Forensic Science report. The statistical analyses in the first National Academies DNA report, DNA Technology in Forensic Science (1992), were updated in The Evaluation of DNA Evidence (1996); with panel members David Siegmund and Stephen Stigler, this second report addressed statistical issues and interpretation of DNA analyses more carefully.

Polygraph and Lie Detection (2003), whose panel included Stephen Fienberg (chair), concluded that trustworthy studies of polygraph accuracy were extremely limited and highly specific to particular incidents, and therefore not generalizable.

Forensic Analysis: Weighing Bullet Lead Evidence (2004), whose authoring panel included Karen Kafadar and Clifford Spiegelman, highlighted the inappropriate statistical procedures being used by the FBI to compare seven-element chemical trace signatures in the lead of bullets found at a crime scene versus those in the suspect’s possession. (The FBI discontinued the practice 18 months later, in September 2005.)

Ballistic Imaging (2008), whose co-authors included statisticians John E. Rolph (chair), Alicia Carriquiry, David L. Donoho, William F. Eddy, Vijay Nair, and Daryl Pregibon, conducted careful statistical analyses of existing ballistic image data, which led to the recommendation against the construction of a national reference database.

All of these reports highlighted the value of statistics in their conclusions, but it was the broad, encompassing nature of the 2009 report that really took the forensic science field by storm.

Congress took notice of the Strengthening Forensic Science report and the attention it was getting in the criminal justice and forensic science communities. Senator Patrick Leahy (D-VT), then-chair of the Senate Judiciary Committee, circulated a draft bill to act on the Strengthening Forensic Science report recommendations in early 2010 and introduced S. 132, the Criminal Justice and Forensic Science Reform Act, in early 2011. Senator Jay Rockefeller (D-WV), then-chair of the Senate Commerce, Science, and Transportation Committee, introduced a more science-centric bill in 2012, S. 3378, the Forensic Science and Standards Act of 2012. Soon Congresswoman Eddie Bernice Johnson (D-TX) introduced a companion bill in the House.

The Leahy, Rockefeller, and Johnson bills and the accompanying hearings drew further attention to the need for forensic science reform.

Concurrent with Congress’s forensic science reform deliberations, the Obama administration was also working on forensic science reform. An administration-wide working group organized by the White House Office of Science and Technology Policy (OSTP) helped inform major undertakings by NIST and the Department of Justice (DoJ).

NCFS was established in early 2014 to enhance the practice and improve the reliability of forensic science. Led jointly by NIST and DoJ, the commission has 36 members, including statistician Fienberg (Kafadar is also a member of the NCFS Scientific Inquiry and Research Subcommittee). Commissioners frequently brought up the need to engage statisticians in forensic science reform in the early NCFS meetings.

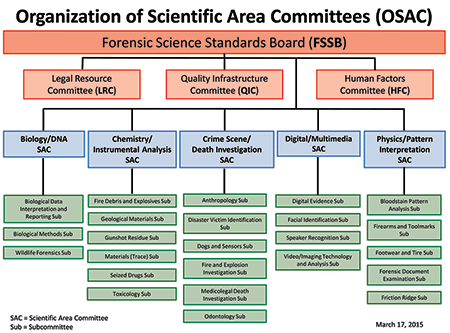

NIST also overhauled the many so-called Scientific Working Groups (SWGs), replacing them with the OSAC. The SWGs consisted of scientific subject-matter experts for 20 forensic science disciplines, but their activities were not coordinated in any way and the levels of activity and standards varied widely. OSAC was established to coordinate the activities and standards across disciplines and is overseen by a Forensic Science Standards Board (FSSB). It has five scientific area committees (SACs), 24 subcommittees, and three resources committees.

In NCFS’s discussion of the development of OSAC, several commissioners urged each OSAC unit to include a statistician, which seemed to become an unwritten goal. Indeed, there are statisticians on the FSSB, four of the five SACs, and a majority of the subcommittees.

Even the Supreme Court took quick notice of the Strengthening Forensic Science report: Justice Antonin Scalia cited it in his opinion in Melendez-Diaz v. Massachusetts, 129 S.Ct. 2527, 2536 (2009), only four months after its release, because the report questioned the reliability of forensic evidence.

Shortly after the release of the Strengthening Forensic Science report, the American Statistical Association (ASA) board of directors issued a statement endorsing the report’s recommendations and highlighting the role of statisticians in forensic science reform. At the urging of its members most active in forensic science research, the ASA also created the ASA Ad Hoc Committee on Forensic Science. The committee played an active role in meeting with congressional committees to reinforce the importance of the 2009 report’s recommendations, support enacting legislation to develop a structure to execute the Strengthening Forensic Science report recommendations, and urge independent oversight of the reform.

In 2012, the committee turned its attention toward the administration, interacting with representatives of the various agencies involved with forensic science research—NIST, the National Institute of Justice (NIJ), and the FBI Laboratory—and NCFS. The committee also organized a concerted effort for statisticians to apply for OSAC positions and has been active in organizing forensic-science–themed sessions for the Joint Statistical Meetings.

NIJ has also asked the ASA forensic science committee for recommendations to serve on its proposal review panels and has reported how much the statistical robustness has improved in proposals to NIJ during the time Greg Ridgeway, a statistician, was its deputy director. In addition, NIST issued a solicitation in 2014 for a Forensic Science Center of Excellence to advance “the wide-spread adoption of probabilistic methods,” a clear nod to the importance of statistics. Indeed, the Center of Excellence was awarded in 2015 to an Iowa State University-led effort for which four of the five principal investigators are statisticians (including Kafadar).

The Government Accountability Office also sought the committee’s help to review the statistical components of its 2013–15 review of the FBI’s anthrax investigation in the early 2000s.

As the science of learning from data and characterizing its uncertainty, statistics is essential to strengthening the forensic sciences. Indeed, as stated in the 2010 ASA board statement, “Statisticians are vital to establishing measurement protocols, quantifying uncertainty, designing experiments for testing new protocols or methodologies, and analyzing data from such experiments.” The pieces in this special CHANCE issue help to illustrate these roles.

Statistics and Compositional Analysis of Bullet Lead

In 2004, the National Academy of Sciences (NAS) published a report on the use of Compositional Analysis of Bullet Lead (CABL) in forensic investigations.

The FBI had used CABL since the 1960s in cases where no gun could be recovered, such as in John F. Kennedy’s assassination. A “signature” consisting of three to four trace element concentrations (later expanded to seven elements) was measured on the lead of bullets found both at the crime scene and in the suspect’s possession. The NAS committee that authored the report found two fundamental flaws with the use of CABL to connect the crime scene bullet lead signature to the suspect.

First, the FBI’s statistical procedure took three concentration measurements of each element on each bullet and calculated the mean plus or minus two standard deviations; if the intervals (mean – 2*standard deviation, mean + 2*standard deviation) calculated on the two bullets (crime scene and suspect) overlapped for all elements, the bullets were claimed to be “analytically indistinguishable.”
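The overlap rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the FBI's actual software: the function names, the dictionary layout, the use of the sample standard deviation (n – 1 denominator), and the concentration values are all hypothetical.

```python
from statistics import mean, stdev


def two_sd_interval(measurements):
    """Return (mean - 2*SD, mean + 2*SD) for one element's replicate measurements."""
    m = mean(measurements)
    s = stdev(measurements)  # assumption: sample SD with n - 1 denominator
    return m - 2 * s, m + 2 * s


def intervals_overlap(a, b):
    """True if the closed intervals a = (lo, hi) and b = (lo, hi) share any point."""
    return a[0] <= b[1] and b[0] <= a[1]


def analytically_indistinguishable(bullet_a, bullet_b):
    """Apply the 2-SD-overlap rule to every element.

    Each bullet is a dict mapping an element symbol to its replicate
    concentrations; both bullets are assumed to list the same elements.
    """
    return all(
        intervals_overlap(two_sd_interval(bullet_a[el]), two_sd_interval(bullet_b[el]))
        for el in bullet_a
    )


# Hypothetical concentrations (ppm), made up purely for illustration.
crime_scene = {"Sb": [100.0, 102.0, 104.0], "Cu": [50.0, 51.0, 52.0]}
suspect = {"Sb": [106.0, 108.0, 110.0], "Cu": [50.5, 51.5, 52.5]}

print(analytically_indistinguishable(crime_scene, suspect))
```

In this made-up example the antimony means differ by three standard deviations, yet the two intervals still overlap, so the rule declares the bullets indistinguishable.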

In fact, the proper statistical test would be Hotelling’s T², assuming that the errors in measuring the concentrations are Gaussian and that their correlation matrix can be well estimated. The FBI’s “2-SD-overlap” test was far more generous, in that bullets whose concentrations differed by as much as four standard deviations would be called “analytically indistinguishable” (implying guilt), leading to a higher error rate than the FBI believed existed (based on its nonrandom sample of bullets from its collection of CABL analyses).
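The “four standard deviations” figure follows directly from the overlap rule. As a short worked sketch for a single element, write the two bullets' means and standard deviations as $\bar{x}_1, s_1$ and $\bar{x}_2, s_2$ (notation introduced here for illustration, not taken from the report):

```latex
% The intervals [\bar{x}_1 - 2 s_1, \bar{x}_1 + 2 s_1] and
% [\bar{x}_2 - 2 s_2, \bar{x}_2 + 2 s_2] overlap exactly when
\[
  |\bar{x}_1 - \bar{x}_2| \;\le\; 2 s_1 + 2 s_2 .
\]
% With equal standard deviations, s_1 = s_2 = s, this becomes
\[
  |\bar{x}_1 - \bar{x}_2| \;\le\; 4 s .
\]
% For comparison, with n = 3 measurements per bullet the standard error
% of the difference in means is
\[
  s \sqrt{\tfrac{1}{3} + \tfrac{1}{3}} \;=\; s \sqrt{\tfrac{2}{3}} \;\approx\; 0.82\, s ,
\]
% so a gap of 4s corresponds to roughly 4.9 standard errors.
```

Assuming equal standard deviations and three measurements per bullet, the rule can therefore accept a difference in means that an ordinary two-sample comparison would treat as very large.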

Second, the bullet lead manufacturing process was highly consistent; consequently, large populations of perhaps 1 to 35 million bullets, which could end up in thousands of boxes, could be analytically indistinguishable by the FBI’s 2-SD-overlap test. Hence, while a proper statistical test could be used to test the hypothesis that two bullets came from the same batch, it could not be used to conclude that they came from the same box.

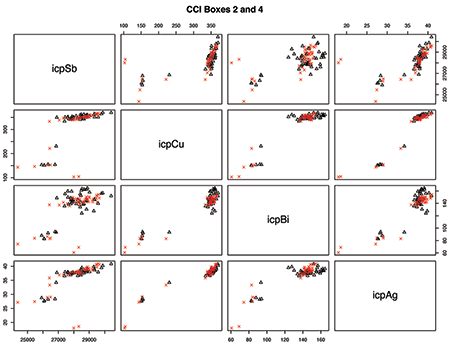

As an example, Figure 1 shows the pairwise concentrations (antimony, Sb; copper, Cu; bismuth, Bi; silver, Ag) in parts per million (ppm) of 50 bullets in each of two boxes supplied to the FBI by CCI Ammunition. Among the 50 x 50 = 2,500 comparisons between bullets in Box 2 and Box 4, 1,092 “matches” would be claimed by the FBI procedure.

Using Bayes’ Theorem on the elemental concentrations of the 100 bullets in these two boxes, the probability that any two bullets came from different boxes, given that the FBI’s 2-SD-overlap test claimed a match, was 0.47. A match under the FBI’s 2-SD-overlap test therefore cannot support a claim that two bullets came from the same box.
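The form of this Bayes' Theorem calculation can be sketched as follows. The only figure taken from the text is the cross-box match rate (1,092 of 2,500 comparisons). The same-box match rates and the prior in the loop are hypothetical placeholders, because the article does not report them, so this sketch shows how the posterior depends on those inputs and does not attempt to reproduce the 0.47 figure. The function name is also an illustrative choice.

```python
def prob_different_box_given_match(p_match_diff, p_match_same, prior_diff=0.5):
    """P(different boxes | test claims a match), by Bayes' Theorem.

    p_match_diff: P(match | bullets come from different boxes)
    p_match_same: P(match | bullets come from the same box)
    prior_diff:   prior probability that the two bullets come from different boxes
    """
    prior_same = 1.0 - prior_diff
    numerator = p_match_diff * prior_diff
    denominator = numerator + p_match_same * prior_same
    if denominator == 0:
        raise ValueError("a match has zero probability under these inputs")
    return numerator / denominator


# From the text: 1,092 claimed matches among 2,500 cross-box comparisons.
p_match_diff = 1092 / 2500

# HYPOTHETICAL same-box match rates and prior, for illustration only.
for p_match_same in (0.4, 0.6, 0.8):
    posterior = prob_different_box_given_match(p_match_diff, p_match_same, prior_diff=0.5)
    print(f"P(match | same box) = {p_match_same:.1f} -> "
          f"P(different boxes | match) = {posterior:.2f}")
```

Because the cross-box match rate is so high, the posterior probability of “different boxes” stays substantial unless same-box matches are far more likely than cross-box matches.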

Further Reading

Everitt, Brian, and Torsten Hothorn. 2011. An Introduction to Applied Multivariate Analysis with R. New York: Springer.

Meier, Paul, and Sandy Zabell. 1980. “Benjamin Peirce and the Howland Will,” Journal of the American Statistical Association 75(371): 497–506.

Slack, Charles. 2005. Hetty: The Genius and Madness of America’s First Female Tycoon, New York: Harper Perennial.

Spiegelman, Clifford H., William A. Tobin, William D. James, Simon J. Sheather, Stuart Wexler, and D. Max Roundhill. 2007. “Chemical and forensic analysis of JFK assassination bullet lots: Is a second shooter possible?,” Annals of Applied Statistics 1(2): 287–301.

Weir, Bruce S. 2007. “The rarity of DNA profiles,” Annals of Applied Statistics 1(2): 358–370.

About the Authors

Steve Pierson earned his PhD in physics from the University of Minnesota. He spent eight years in the Physics Department of Worcester Polytechnic Institute and later became head of government relations at the American Physical Society before joining the ASA as director of science policy.

Karen Kafadar is a leading exponent of exploratory data analysis. Her research also focuses on robust methods, characterization of uncertainty in the physical, chemical, biological, and engineering sciences, and methodology for the analysis of screening trials. She was editor of the Journal of the American Statistical Association’s Review Section and of Technometrics, and is currently biology and genetics editor of the Annals of Applied Statistics. In addition to her service on the NISS Board of Trustees, she is a past or present member on the governing boards for the ASA, Institute of Mathematical Statistics, and International Statistical Institute. She is a Fellow of the ASA, American Association for the Advancement of Science, and International Statistical Institute, and currently serves on OSAC’s Forensic Science Standards Board.