A Bellman View of Jesse Livermore

Richard Bellman’s Principle of Optimality, formulated in 1957, is the heart of dynamic programming, the mathematical discipline that studies the optimal solution of multi-period decision problems.

Reminiscences of a Stock Operator (1923), Edwin Lefèvre’s thinly fictionalized account of the career of the legendary trader Jesse Livermore, describes his methods in detail. In this article we examine some of them (Livermore’s rules for trading) to show how they are directly reflected in Bellman’s Principle, and to demonstrate how, in their striving for optimality, two of the greatest minds of the 20th century were neatly aligned.

Bellman’s 1957 book on dynamic programming introduces his conceptual framework for the solution of multi-stage decision processes. Although they take many different mathematical forms, the problems studied by Bellman all share the following characteristics:

- There is a system characterized at each stage by a set of parameters and state variables.

- At each stage of the process, we have a choice of a number of decisions.

- The effect of a decision is a transformation of the state variables.

- Past history is of no importance in determining future actions.

- The purpose is to maximize a function of the state variables.

In Bellman’s words, a “policy” is any rule for making decisions that yields an allowable sequence of decisions, and an “optimal policy” is one that maximizes a pre-assigned function of the final state variables. For every problem with the above listed properties, Bellman establishes the following rule, his Principle of Optimality: “An optimal policy has the property that whatever the initial state and initial decision are, the remaining decisions must constitute an optimal policy with regard to the state resulting from the first decision.”

The characteristics listed above are also present in the trading process, so the Principle of Optimality should also apply to trading. It suggests that we study the Q-value matrix, which describes the value of performing action \(a\) in our current state \(s\) and then acting optimally thereafter. In this framework, let \(Q(s, a)\) denote the value available from the current state \(s\) through action \(a\) (e.g., interpret \(s\) as the agent’s current wealth, and \(a\) as a parameterization of a long or short position [or any other action] he initiates).

The current optimal policy and value function are given by

\[ a^*(s) := \arg\max_a Q(s, a) \]

and

\[ V(s) := \max_a Q(s, a) = Q(s, a^*(s)), \]

respectively. Let \(s'\) denote the next state of the system, and let \(r(a, s, s')\) be the reward for moving to the next state \(s'\), given the current state \(s\) and action \(a\).
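For a finite action set, the two definitions above reduce to an arg max and a max over one row of the Q-matrix. The following minimal sketch assumes a hand-made Q table stored as a Python dictionary. The states, actions, and numbers are hypothetical and serve only to illustrate \(a^*(s)\) and \(V(s)\).

```python
# Hypothetical Q-matrix for illustration: Q[state][action] -> value.
# The states, actions and numbers are made up; only the definitions matter.
Q = {
    "s0": {"long": 1.5, "neutral": 0.0, "short": -1.5},
    "s1": {"long": -0.5, "neutral": 0.0, "short": 0.5},
}


def optimal_action(Q, s):
    """a*(s) := arg max_a Q(s, a), taken over the finite set of actions in row s."""
    row = Q[s]
    return max(row, key=row.get)


def value(Q, s):
    """V(s) := max_a Q(s, a) = Q(s, a*(s))."""
    return Q[s][optimal_action(Q, s)]


for s in Q:
    print(s, optimal_action(Q, s), value(Q, s))
```

This prints `s0 long 1.5` and `s1 short 0.5`. If two actions tie, `max` returns the first one in the row. The article does not specify a tie-breaking rule, so that choice is an assumption of the sketch.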

We would like to put the definitions just introduced into a sequential context. We consider the path of a single market in which trading takes place at discrete times, with time \(t = 0\) corresponding to the current time. The realized current state is denoted by \(s_t\), the current action by \(a_t\), and a future, as yet unobserved, state by \(S_{t+1}\). The optimal policy and value function are then defined by

\[ a^*(s_t) = \arg\max_a Q_{t+1}(s_t, a) \]

and

\[ V(s_t) = Q_{t+1}(s_t, a^*(s_t)), \]

respectively.

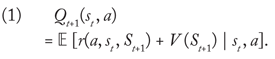

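The Principle of Optimality now provides the key sequential identity of dynamic programming, namely that

\[ Q_{t+1}(s_t, a) = \mathbb{E}\big[\, r(a, s_t, S_{t+1}) + V(S_{t+1}) \,\big|\, s_t, a \,\big], \qquad V(S_{t+1}) = \max_{a'} Q_{t+2}(S_{t+1}, a'), \tag{1} \]

where the expectation is taken under the real-world probabilities of the next state \(S_{t+1}\).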

In Bellman’s words, whatever today’s state \(s_t\) and whatever today’s decision \(a\), today’s value \(Q_{t+1}(s_t, a)\) is based on (expected) optimal decisionmaking with regard to the next state \(S_{t+1}\), which results from today’s state \(s_t\) and decision \(a\).

To find today’s optimal action, one has to solve the equilibrium condition (1) for the Q-matrix and then read off the optimal action \(a^*(s_t)\), which attains \(\max_a Q_{t+1}(s_t, a)\). For simplicity, we assume that \(a \in A\) takes only finitely many values.

(Equations (1) and (2) also allow for the inclusion of a discount factor, which, if required, should be incorporated in \(r(a, s_t, S_{t+1})\).)
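One way to make “solve (1) for the Q-matrix” concrete is backward induction over a finite horizon. Start from a zero value after the last period and apply (1) one step at a time. The following minimal sketch assumes a toy model that is not in the article: a fixed dollar stake, up and down moves of fixed size, a real-world up-move probability `P_UP`, and a three-period horizon. All names and numbers are hypothetical. In the code, `Q(t, s, a)` plays the role of \(Q_{t+1}(s_t, a)\).

```python
from functools import lru_cache

# Toy model, assumed for illustration only (not taken from the article):
# each period the trader stakes a fixed amount, and the price moves up with
# probability P_UP (a long gains MOVE dollars) or down (a long loses MOVE dollars).
ACTIONS = ("long", "neutral", "short")
DIRECTION = {"long": 1.0, "neutral": 0.0, "short": -1.0}
P_UP = 0.45    # hypothetical real-world probability of an up move
MOVE = 10.0    # hypothetical dollar gain or loss of the stake per move
HORIZON = 3    # hypothetical number of trading periods


def transitions(s, a):
    """Yield (probability, next state s', reward r(a, s, s')) for wealth s and action a."""
    for prob, price_move in ((P_UP, MOVE), (1.0 - P_UP, -MOVE)):
        reward = DIRECTION[a] * price_move
        yield prob, s + reward, reward


@lru_cache(maxsize=None)
def V(t, s):
    """Optimal value from period t onward; zero once the horizon is reached."""
    if t == HORIZON:
        return 0.0
    return max(Q(t, s, a) for a in ACTIONS)


def Q(t, s, a):
    """Identity (1): expected reward now plus the optimal value of the next state."""
    return sum(p * (r + V(t + 1, s_next)) for p, s_next, r in transitions(s, a))


def a_star(t, s):
    """Read off today's optimal action as the arg max over the finite action set."""
    return max(ACTIONS, key=lambda a: Q(t, s, a))


print(a_star(0, 1000.0), V(0, 1000.0))
```

With `P_UP = 0.45`, the short position earns an expected $1 per period. As a result, `a_star(0, 1000.0)` returns `"short"` and `V(0, 1000.0)` is approximately 3.0. Because the stake is fixed, the values do not depend on the wealth level `s`. This mirrors the wealth-independence assumption used in Section 2.1.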

However, in reality there is a caveat: the real-world probabilities are unknown to the trader. To evaluate (1) in his decisionmaking, he therefore has to take expectations under his subjective probability distribution \(q(S_{t+1} \mid s_t, a)\). This distribution describes his beliefs about the future path of the state variables, given his current wealth \(s_t\) and his action \(a\).

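Thus, instead of (1), the trader will attempt to solve

\[ Q^{q}_{t+1}(s_t, a) = \mathbb{E}_q\big[\, r(a, s_t, S_{t+1}) + V_q(S_{t+1}) \,\big], \qquad V_q(S_{t+1}) = \max_{a'} Q^{q}_{t+2}(S_{t+1}, a'), \tag{2} \]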

where \(\mathbb{E}_q[\cdot]\) and \(V_q(\cdot)\) denote the expectation and the value function computed with respect to the distribution \(q(S_{t+1} \mid s_t, a)\) of the trader’s beliefs.

Within the Bellman and Livermore optimal framework, we note a number of compelling features, which we summarize in the following remarks.

Remark 1.1. It is surprising how little effect the distinction between (1) and (2) has on the actual trading process.

Remark 1.2. Rules based on deviations between realized market prices and a trader’s expectations have little place in assessing the optimal action \(a^*\). Arguments such as “sell because prices went higher than my expectations” do not enter the picture, which is a version of Livermore’s maxim that “the market is never wrong.” Put simply, we should not worry about the path of past actions, or how we got here, but only about acting optimally from here on out.

Remark 1.3. A large part of the Bellman and Livermore optimal policy insight is that the trader’s subjective beliefs (2) must be updated conditionally on observed market prices. Because the market has a superior information set, the optimal action when prices rise is to do nothing. At such times, in Livermore’s words, “One should hope, not fear.” When prices fall, however, “One should fear, not hope,” according to Livermore, and one should think of selling.

We will look at the importance of the remarks in detail in the next section.

2. Trading Principles

In the following sections, we will discuss two of Jesse Livermore’s main trading rules, hoping to provide a modest insight into his trading principles.

2.1 Profits take care of themselves, losses never do.

Suppose there are only two possible market positions, long and neutral, denoted by \(a_L\) and \(a_N\), respectively. A short position is treated as the reflection of a long one.

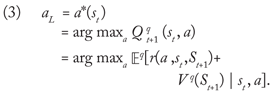

Suppose a trader initiates a long position \(a_L\) at time \(t\) because, given his current wealth \(s_t\), he observes

\[ Q^{q}_{t+1}(s_t, a_L) > Q^{q}_{t+1}(s_t, a_N). \tag{3} \]

But suppose that at time \(t + 1\) he finds that \(r(a_L, s_t, S_{t+1}) < 0\), so that his new wealth is \(S_{t+1} < s_t\). The trader then has to evaluate whether to close his position (i.e., action \(a_N\)) or to hold on to it (i.e., action \(a_L\)).

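If we assume that the decision whether to be in or out of the market is independent of the current wealth level \(S_{t+1}\), then his choice at time \(t + 1\) depends only on which of the two actions attains \(\max_{a \in \{a_L, a_N\}} Q_{t+2}(S_{t+1}, a)\), and not on the level of \(S_{t+1}\) itself.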

We observe that, if acting under an unchanged subjective probability

\[ q = q(S_{t+1} \mid s_t, a), \]

then the trader will proceed with \(a_L\), since

\[ Q^{q}_{t+2}(S_{t+1}, a_L) > Q^{q}_{t+2}(S_{t+1}, a_N), \]

as before.

However, suppose the trader supplements his rationale (i.e., his subjective probability \(q\)) with the information contained in the most recent price move \(x_{t+1}\), that is, with the realized rewards \(r(a, s_t, S_{t+1})\) for \(a \in \{a_L, a_N\}\).

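If we denote the trader’s updated views by \(q \cup \{x_{t+1}\}\), then, by a symmetry argument, we get that

\[ Q^{q \cup \{x_{t+1}\}}_{t+2}(S_{t+1}, a_L) < Q^{q \cup \{x_{t+1}\}}_{t+2}(S_{t+1}, a_N), \]

where, by assumption,

\[ r(a_L, s_t, S_{t+1}) < 0 = r(a_N, s_t, S_{t+1}). \]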

We observe that, in the absence of external information other than the price move \(x_{t+1}\), and to remain consistent with his former rationale, the trader should take the neutral action and exit his position.

In reality, because of the cost of trading, the trader’s reaction will not be immediate. However, the implication of the Principle of Optimality is that his only concern should be selling the losing position.

The reflection of the above argument, in the case where \(r(a_L, s_t, S_{t+1}) > 0\), shows that next period’s optimal action is \(a_L\), the same as in the current period. The winners take care of themselves.
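Read as a mechanical rule, the conclusion of this argument says: stay in a position that is showing a profit, and move to neutral after a loss. The following sketch encodes that reading. The function names and the reward sequence are hypothetical, and a short position is treated as the reflection of a long one. Re-entry after going neutral is deliberately not modelled, since the text does not address it.

```python
def hold_or_exit(current_action, realized_reward):
    """Keep a position that is showing a profit; move to neutral after a loss."""
    if current_action != "neutral" and realized_reward < 0:
        return "neutral"
    return current_action


def follow_rule(long_rewards):
    """Start long and apply hold_or_exit after each day's realized reward.

    long_rewards[i] is what a long position would earn on day i (hypothetical data).
    Returns a list of (action held that day, reward realized that day).
    """
    action, history = "long", []
    for r_long in long_rewards:
        realized = {"long": r_long, "neutral": 0.0, "short": -r_long}[action]
        history.append((action, realized))
        action = hold_or_exit(action, realized)
    return history


for day in follow_rule([10.0, 10.0, -10.0, 10.0]):
    print(day)
```

The position is held through the two winning days and closed after the first losing one. It therefore sits out the final day, printing `('long', 10.0)`, `('long', 10.0)`, `('long', -10.0)`, and `('neutral', 0.0)`.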

In Livermore’s words:

Profits always take care of themselves, but losses never do. The speculator has to insure himself against considerable losses by taking the first small loss. In doing so, he keeps his account in order, so that, at some future time, when he has a constructive idea, he will be in a position to go into another deal, taking the same amount of stock he had when he was wrong.

We’ll now look at a brief example, putting the just introduced concepts into practice.

Example. Consider a trader, Jan, who is investing in Google (GOOG) shares. Suppose Jan’s only counterparty is a broker called Theobald-Fritz, whom we will nickname Hermes. (In classical Greek mythology, Hermes is the patron of travelers, herdsmen, poets, athletes, inventors, traders, and thieves. The role played by Hermes here also deliberately resembles Benjamin Graham’s 1949 creation of Mr. Market.)

Suppose Jan has just purchased GOOG shares worth $1,000 from Hermes (i.e., we have \(s_t = \$1{,}000\)). Suppose Jan trades with Hermes daily, and every time Jan trades, he invests exactly $1,000 (independent of his net wealth), taking his profit or loss on the trade the following day. Suppose further that the daily price movements of GOOG shares are exactly ±1%, where \(p\) and \(1 - p\) are the real-world probabilities of an up and a down move, respectively.

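Define \(u := \$10\) and \(d := \$10\), the dollar gain and loss on Jan’s $1,000 stake for an up and a down move. Denote the decision to take a long (short) position by \(a_L\) (by \(a_S\)). As \(p\) is unknown to him, Jan has to base his trading decision on his personal belief \(q\), with \(0 < q < 1\), about the probability of an up move. According to Jan’s own estimate, \(q > 0.5\), and

\[ \mathbb{E}_q\big[r(a_L, s_t, S_{t+1})\big] = q\,u - (1 - q)\,d = \$10\,(2q - 1) > 0. \]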

Therefore, Jan is very happy with his newly purchased GOOG shares.
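Jan’s subjective expected reward from the long position is \(q\,u - (1 - q)\,d\). Because \(u = d = \$10\), this is positive exactly when \(q > 0.5\). The following sketch compares that view with the real-world one. The values `q = 0.6` and `p = 0.45` are hypothetical and were chosen only to satisfy \(q > 0.5 > p\), as in the example.

```python
# Jan's one-day expected reward on a fixed $1,000 stake with +/-1% moves,
# so u = d = $10 as in the example. The q and p values below are hypothetical.
U, D = 10.0, 10.0


def expected_long(prob_up, u=U, d=D):
    """E[r(a_L, s_t, S_{t+1})] = prob_up * u - (1 - prob_up) * d."""
    return prob_up * u - (1 - prob_up) * d


def expected_short(prob_up, u=U, d=D):
    """Short is the reflection of long: it gains d on a down move and loses u on an up move."""
    return (1 - prob_up) * d - prob_up * u


q, p = 0.6, 0.45  # assumed: Jan believes q > 0.5, while in reality p < 0.5
print("Jan's view:      long %+.2f, short %+.2f" % (expected_long(q), expected_short(q)))
print("real-world view: long %+.2f, short %+.2f" % (expected_long(p), expected_short(p)))
```

Under Jan’s beliefs, the long position is worth about +$2.00 per day. Under the real-world probability, the ranking reverses and the short position is worth about +$1.00. This is the gap between (2) and (1) that Jan cannot observe directly.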

Suppose, in reality, \(p < 0.5\), and the following day Jan checks with Hermes, only to find that \(S_{t+1} = s_t - d = \$990\) and \(r(a_L, s_t, s_t - d) = -\$10 < 0\).

Naturally, Jan is somewhat unimpressed with his results, and he initially blames Hermes. But Jan has to make a decision: should he increase his GOOG holding, following \(a_L\)?

Jan’s personal estimate, \(q > 0.5\), is unchanged, so he is tempted to stay long and buy more. However, having carefully read Bellman’s and Livermore’s books, Jan is also well aware that this would not accord with the optimal Bellman policy, which would have returned \(r(a_S, s_t, s_t + d) = \$10 > 0\) up to this point. Realizing that increasing his position would inadvertently deviate from the optimal path, Jan decides to liquidate his position.

Of course, had Jan been profitable with \(a_L\), the reverse argument would have held. He would have kept his GOOG shares, enjoying the ride on the optimal Bellman trajectory and avoiding any disputes with Hermes.
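Jan’s first day can be replayed with the numbers of the example: a $1,000 long stake and a 1% fall. The following sketch computes the realized reward of each possible position and identifies the position the optimal path would have held. It then applies the Section 2.1 conclusion that a losing long is closed when no information beyond the price move is available. The variable and function names are hypothetical.

```python
# Jan's first day, using the numbers of the example: a $1,000 long stake and a 1% fall.
STAKE = 1000.0       # Jan always trades exactly $1,000
s_t = 1000.0         # his wealth s_t right after buying from Hermes
day_return = -0.01   # realized move: GOOG fell by 1%


def realized_reward(action, ret=day_return, stake=STAKE):
    """r(a, s_t, S_{t+1}) for a fixed stake; a short position is the reflection of a long one."""
    direction = {"long": 1.0, "neutral": 0.0, "short": -1.0}[action]
    return direction * stake * ret


rewards = {a: realized_reward(a) for a in ("long", "neutral", "short")}
best_so_far = max(rewards, key=rewards.get)   # the position the optimal path would have held
S_next = s_t + rewards["long"]                # Jan's wealth S_{t+1} = s_t - d
# Section 2.1: with no information beyond this price move, a losing long is closed.
next_action = "neutral" if rewards["long"] < 0 else "long"

print(rewards["long"], rewards["short"], best_so_far, S_next, next_action)
```

The long reward is -$10, the short reward +$10, and the wealth $990, matching the figures in the text. The rule returns `"neutral"`, which corresponds to Jan liquidating his position.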

2.2 Don’t average down.

Averaging down is the practice of increasing one’s position after taking a loss, in the hope of reaping the expected profit and recovering all previous losses.

Strictly speaking, averaging down is already prohibited if losing positions are exited, which we covered in Section 2.1; however, the strategy is so popular that it warrants separate consideration.

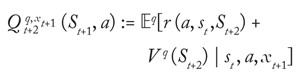

Using the notation of Section 2.1, suppose again that, based on (3), our trader holds a long position \(a_L\) at time \(t\). Suppose also that at time \(t + 1\) he finds that \(r(a_L, s_t, S_{t+1}) < 0\), so that his new wealth is \(S_{t+1} < s_t\). If our trader thinks that an increased long position is in order, then clearly he must think that

\[ Q^{q'}_{t+2}(S_{t+1}, a_L) > Q^{q'}_{t+2}(S_{t+1}, a_N), \tag{4} \]

where \(q'\) denotes his updated personal probabilities. But in the absence of external information other than the price move \(x_{t+1}\), we have \(q' = q \cup \{x_{t+1}\}\), and, as already seen in Section 2.1, (3) then implies

\[ Q^{q'}_{t+2}(S_{t+1}, a_L) < Q^{q'}_{t+2}(S_{t+1}, a_N), \tag{5} \]

which means that (4) cannot be true.

The contradiction between (4) and (5) is an interesting one, and it goes slightly beyond the implications of Section 2.1. Since acting on \(q' = q \cup \{x_{t+1}\}\) leads to (5), averaging down can only ever be justified if the trader believes he has obtained new external information. That information would have to go beyond what was learned from the latest price move \(x_{t+1}\) and outweigh the information in the price, which is a very rare case indeed. Generally, doubling up on a losing position is irrational.

As Livermore also wrote:

One other point: it is foolhardy to make a second trade if your first trade shows you a loss. Never average losses. Let that thought be written indelibly upon your mind.

I have warned against averaging losses. That is a most common practice. Great numbers of people will buy a stock, let us say at $50, and two or three days later if they can buy at $47 they are seized with the urge to average down. […] If one is to apply such an unsound principle, he should keep on averaging by buying 200 shares at $44, then 400 at $41, 800 at $38, 1600 at $35, 3200 at $32, 6,400 at $29 and so on. How many speculators could stand such pressure? Yet if the policy is sound it should not be abandoned. Of course, abnormal moves such as the one indicated do not happen often. But it is just such abnormal moves that the speculator must guard against to avoid disaster.

So, at the risk of repetition, let me urge you to avoid averaging down. […] Why send good money after bad? Keep that good money for another day. Risk it on something more attractive than an obviously losing deal.
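The burden of the schedule in the second quotation can be tallied directly. The following sketch uses only the lots and prices Livermore lists explicitly. The opening purchases are elided in the quotation and are not reconstructed here. The names `SCHEDULE` and `tally` are illustrative.

```python
# Lots and prices exactly as listed in the quotation; the elided opening purchases are omitted.
SCHEDULE = [(200, 44), (400, 41), (800, 38), (1600, 35), (3200, 32), (6400, 29)]


def tally(schedule):
    """Return (total shares, total dollars committed, average cost) after each purchase."""
    shares, cost, rows = 0, 0, []
    for qty, price in schedule:
        shares += qty
        cost += qty * price
        rows.append((shares, cost, cost / shares))
    return rows


for (qty, price), (shares, cost, avg) in zip(SCHEDULE, tally(SCHEDULE)):
    print(f"buy {qty} at ${price}: hold {shares} shares, ${cost} committed, average ${avg:.2f}")
```

After the sixth lot, the speculator holds 12,600 shares with $399,600 committed at an average cost of about $31.71. Each new lot is larger than all earlier lots combined, so the last purchase alone (6,400 shares at $29, or $185,600) accounts for close to half of the capital at risk.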

Remark 2.1. An immediate corollary of Section 2.2 is that trading on the belief in a “true” value is dangerous. Almost always, the true value is established once, and the trader then waits for the price to converge to it. Adverse movements are interpreted as providing better entry points and are believed to strengthen the opportunity. Clearly, any such reasoning compounds the conflict between (4) and (5) severalfold and should be strictly avoided.

Remark 2.2. It is worth adding that Livermore’s original writings were not tied to any specific market structure and were presented as holding for any market. Similarly, Bellman’s Principle of Optimality applies to any multi-period decisionmaking process. Therefore, the Bellman and Livermore optimal policy insight presented in this article applies to any financial transaction that takes place within an exogenously given market of any form.

Conclusion

Economist John Kenneth Galbraith famously wrote, “Faced with the choice between changing one’s mind and proving that there is no need to do so, almost everyone gets busy on the proof.” In this article, we’ve shown that within the Bellman and Livermore framework every view held should be updated based on the latest available information. An unwillingness to learn, of the kind Galbraith describes, is therefore likely to be detrimental.

Finally, we summarize the Bellman and Livermore optimal policy insights as follows:

1. Do not trust your own opinion and back your judgment until the action of the market itself confirms your opinion.

2. Markets are never wrong—opinions often are.

3. The real money made in speculating has been in commitments showing in profit right from the start.

4. As long as a stock is acting right, and the market is right, do not be in a hurry to take profits.

5. The money lost by speculation alone is small compared with the gigantic sums lost by so-called investors who have let their investments ride.

6. Never buy a stock because it has had a big decline from its previous high.

7. Never sell a stock because it seems high-priced.

8. Never average losses.

9. Big movements take time to develop.

10. It is not good to be too curious about all the reasons behind price movements.

Further Reading

Bellman, Richard E. 1957. Dynamic programming. Princeton, N.J.: Princeton University Press.

Graham, Benjamin. 1949. The intelligent investor: A book of practical counsel. HarperBusiness.

Lefèvre, Edwin. 1923. Reminiscences of a stock operator. New York: John Wiley & Sons.

Livermore, Jesse L. 1940. How to trade in stocks. New York: John Wiley & Sons.

About the Authors

Nick Polson is a Bayesian statistician who conducts research on financial econometrics, Markov chain Monte Carlo, particle learning, and Bayesian inference. Inspired by an interest in probability, Polson has developed a number of new algorithms and applied them to statistics and financial econometrics, including the Bayesian analysis of stochastic volatility models and sequential particle learning for statistical inference.

Jan Hendrik Witte is a numerical analyst who has developed a number of new algorithms in the area of numerical optimal stochastic control. Witte is generally interested in the areas of numerical mathematical finance, systematic trading, and portfolio optimization.